Face recognition improved by face aligning

TEXT:

Face recognition consists of these steps:

- Create training set for face recognition

- Load training set for face recognition

- Train faces and create model

- Capture/load image where you want to recognize people

- Find face/s

- Adjust the image for face recognition (greyscale, crop, resize, rotate …)

- Use trained model for face recognition

- Display result

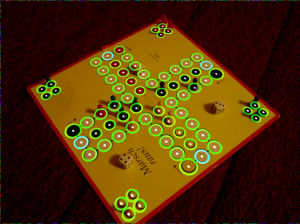

Creating training set

To recognize faces you first need to train faces and create model for each person you want to be recognized. You can do this by manually cropping faces and adjusting them, or you can just save adjusted face from step 6 with name of the person. It is simple as that. I store this information in the name of file, which may not be the best option so there is room for improvement here.

string result;

unsigned long int sec = time(NULL);

result << “facedata/” << user << “_” << sec << “.jpg”;

imwrite(result, croppedImage); capture = false;

As you can see I add timestamp to the name of the image so they have different names. And string user is read from console just like this:

user.clear(); cin >> user;

Loading training set

Working with directories in windows is a bit tricky because String is not suitable since directories and files can contain different diacritics and locales. Working with windows directories in c++ requires the use of WString for file and directories names.

vector get_all_files_names_within_folder(wstring folder)

{

vector names;

TCHAR search_path[200];

StringCchCopy(search_path, MAX_PATH, folder.c_str());

StringCchCat(search_path, MAX_PATH, TEXT(“\\*”));

WIN32_FIND_DATA fd;

HANDLE hFind = ::FindFirstFile(search_path, &fd);

if (hFind != INVALID_HANDLE_VALUE)

{

do

{

if (!(fd.dwFileAttributes & FILE_ATTRIBUTE_DIRECTORY))

{

wstring test = fd.cFileName;

string str(test.begin(), test.end());

names.push_back(str);

}

} while (::FindNextFile(hFind, &fd));

::FindClose(hFind);

}

return names;

}

void getTrainData(){

wstring folder(L”facedata/”);

vector files = get_all_files_names_within_folder(folder);

string fold = “facedata/”;

int i = 0;

for (std::vector::iterator it = files.begin(); it != files.end(); ++it) {

images.push_back(imread(fold + *it, 0));

labelints.push_back(i);

string str = *it;

unsigned pos = str.find(“_”);

string str2 = str.substr(0, pos);

labels.push_back(str2);

i++;

}

}

I create 3 sets for face recognition and mapping. variable labelints is used in face recognition model, then its value serves as index for finding proper string representation of person and his training image.

Train faces and create model

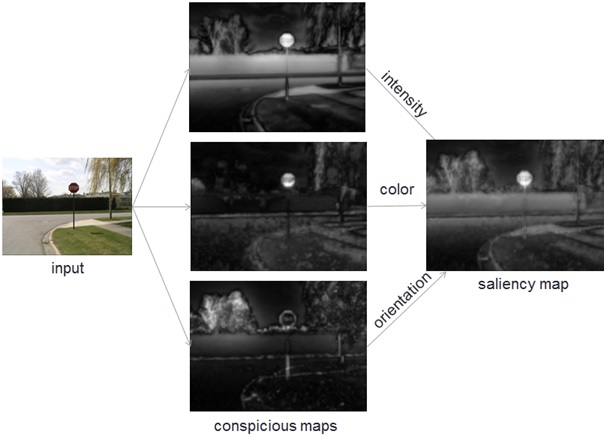

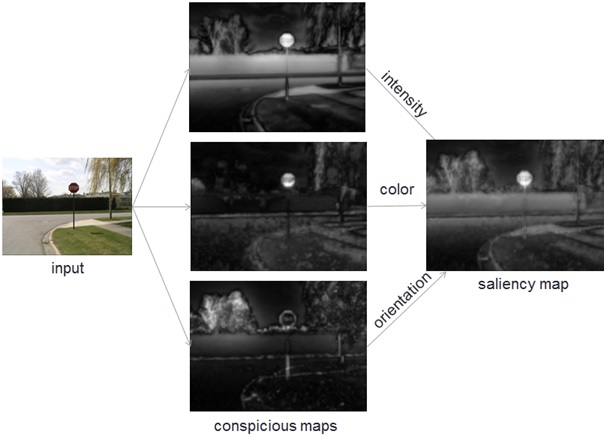

Face recognition in opencv has three available implementations: Eiegenfaces, Fisherfaces and Local Binary Patterns Histograms. In this stage you choose which one you want to use. I found out that LBPH has the best results but is really slow. You can find out more about which one to choose in opencv’s face recognition tutorial.

void learnFacesEigen(){

model = createEigenFaceRecognizer();

model->train(images, labelints);

}

void learnFacesFisher(){

model = createFisherFaceRecognizer();

model->train(images, labelints);

}

void learnFacesLBPH(){

model = createLBPHFaceRecognizer();

model->train(images, labelints);

}

Capture/load image where you want to recognize people

You can load image from file as was showed before on training set or you can capture frames from your webcam. Some webcams are a bit slow and you might end up adding some sleep between initialising the camera and capturing frames. If you don’t get any frames try increasing the sleep or change the stream number.

VideoCapture stream1(1);

//– 2. Read the video stream

if (!stream1.isOpened()){

cout << “cannot open camera”;

}

Sleep(2000);

while (true)

{

bool test = stream1.read(frame);

if (test)

{

detectAndDisplay(frame, capture, user, recognize);

}

else

{

printf(” –(!) No captured frame — Break!”); break;

}

}

stream1.release();

You can play with number in stream1(number) to choose the webcam you need or pick -1 to open a window with webcam selection and 0 is default.

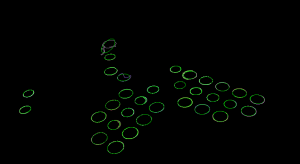

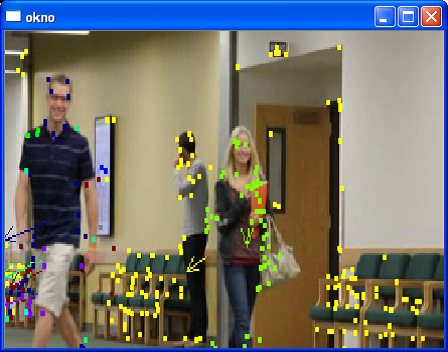

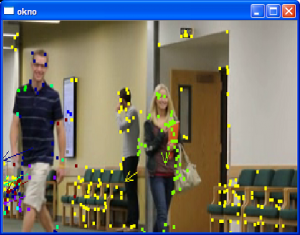

Find face/s

Facedetection in opencv is usually done by using haar cascades. You can learn more about it in opencv’s Cascade Classifier post

Code is explained there so I will skip this part.

Adjust the image for face recognition

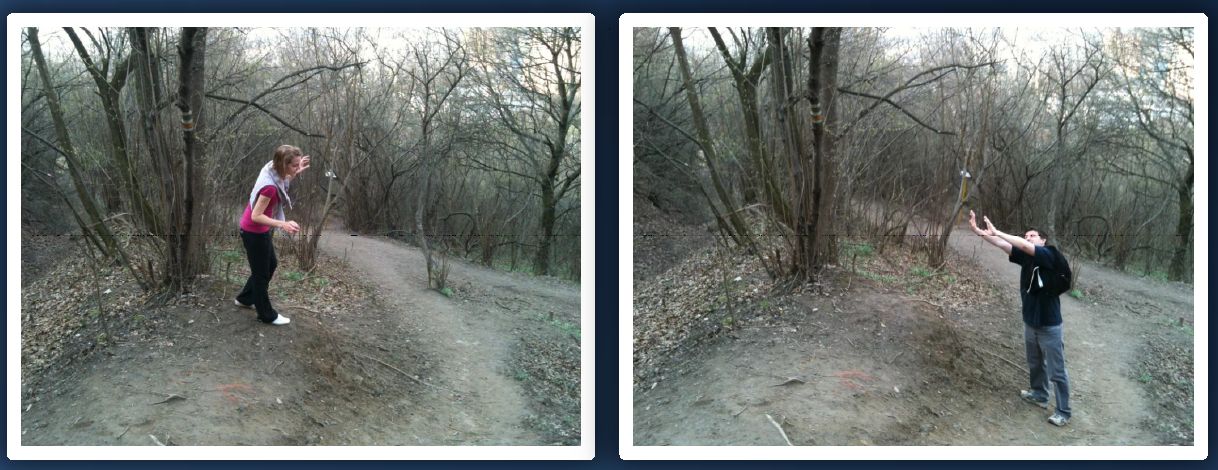

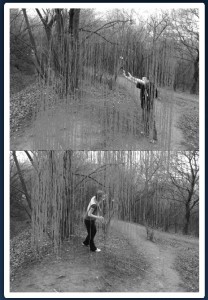

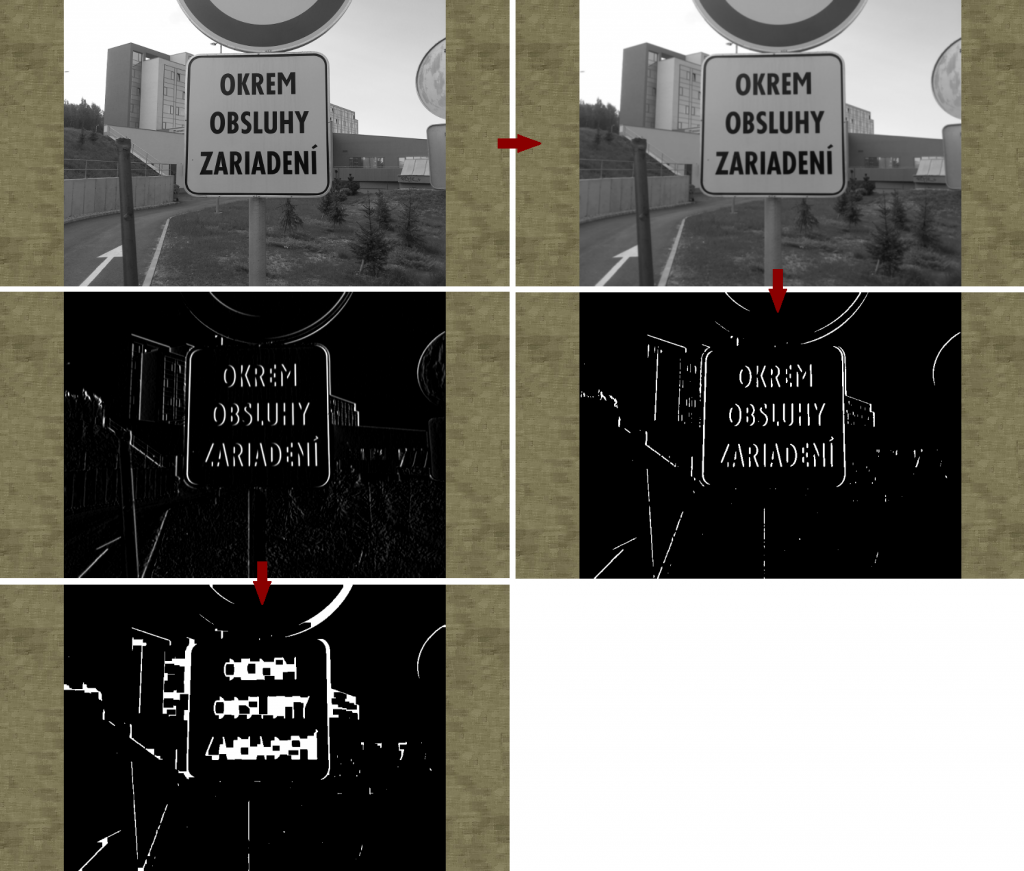

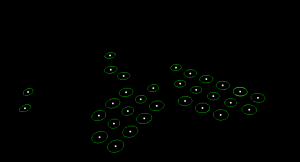

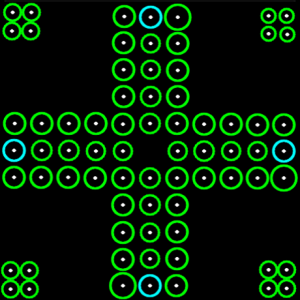

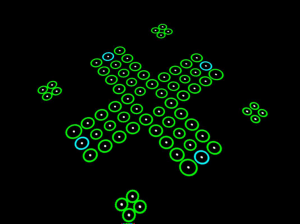

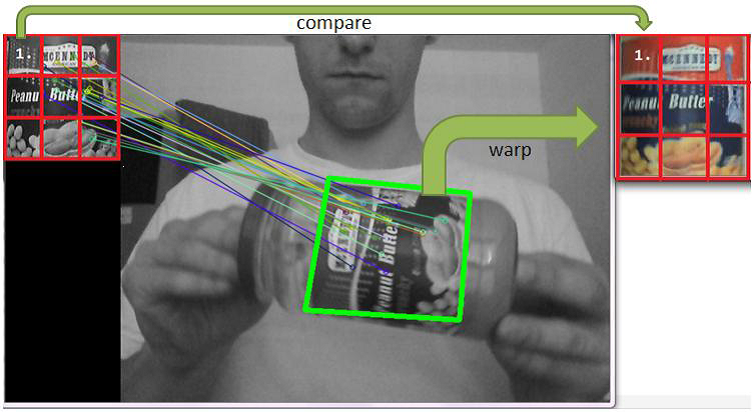

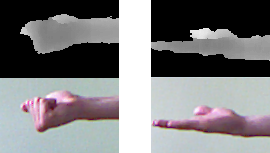

The most interesting part and the part where there is still much to do is this one. Face recognition in OpenCV works only on images with same size and greyscale. The more aligned faces are the better face recognition results are. So we need to convert the image to greyscale.

cvtColor(frame, frame_gray, CV_BGR2GRAY);

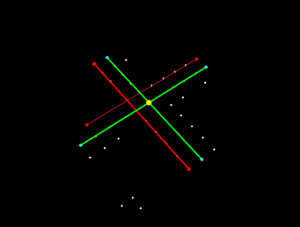

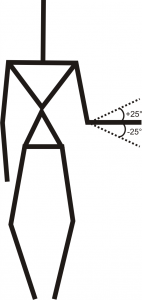

Then rotate face to vertical position so it is aligned perfectly. I do this by computing height difference between the eyes and when eyes are shut I use histogram of oriented gradients for nose to get its orientation. First thing first we need to find eyes on picture. I cropped the image to just the face part so the classifier has easier job finding eyes and doesn’t throw false positives.

int tlY = faces[i].y;

if (tlY < 0){ tlY = 0; } int drY = faces[i].y + faces[i].height; if (drY>frame.rows)

{

drY = frame.rows;

}

Point tl(faces[i].x, tlY);

Point dr(faces[i].x + faces[i].width, drY);

Rect myROI(tl, dr);

Mat croppedImage_original = frame(myROI);

I tried different crops. But the best one seems to be the one with dropped out chin and a little bit of forehead which is defaultly recognized by OpenCV’s face haar classifier. Then I use different classifier to find the eyes and I decide from x position which one is left, which one is right.

eye_cascade.detectMultiScale(croppedImageGray, eyes, 1.1, 3, CV_HAAR_DO_CANNY_PRUNING, Size(croppedImageGray.size().width*0.2, croppedImageGray.size().height*0.2));

int eyeLeftX = 0;

int eyeLeftY = 0;

int eyeRightX = 0;

int eyeRightY = 0;

for (size_t f = 0; f < eyes.size(); f++)

{

int tlY2 = eyes[f].y + faces[i].y;

if (tlY2 < 0){ tlY2 = 0; } int drY2 = eyes[f].y + eyes[f].height + faces[i].y; if (drY2>frame.rows)

{

drY2 = frame.rows;

}

Point tl2(eyes[f].x + faces[i].x, tlY2);

Point dr2(eyes[f].x + eyes[f].width + faces[i].x, drY2);

if (eyeLeftX == 0)

{

//rectangle(frame, tl2, dr2, Scalar(255, 0, 0));

eyeLeftX = eyes[f].x;

eyeLeftY = eyes[f].y;

}

else if (eyeRightX == 0)

{

////rectangle(frame, tl2, dr2, Scalar(255, 0, 0));

eyeRightX = eyes[f].x;

eyeRightY = eyes[f].y;

}

}

// if lefteye is lower than right eye swap them

if (eyeLeftX > eyeRightX){

croppedImage = cropFace(frame_gray, eyeRightX, eyeRightY, eyeLeftX, eyeLeftY, 200, 200, faces[i].x, faces[i].y, faces[i].width, faces[i].height);

}

else{

croppedImage = cropFace(frame_gray, eyeLeftX, eyeLeftY, eyeRightX, eyeRightY, 200, 200, faces[i].x, faces[i].y, faces[i].width, faces[i].height);

}

After that I rotate the face by height difference of eyes, drop it and resize it to the same size all training data is.

Mat dstImg;

Mat crop;

if (!(eyeLeftX == 0 && eyeLeftY == 0))

{

int eye_directionX = eyeRightX – eyeLeftX;

int eye_directionY = eyeRightY – eyeLeftY;

float rotation = atan2((float)eye_directionY, (float)eye_directionX) * 180 / PI;

if (rotation_def){

rotate(srcImg, rotation, dstImg);

}

else {

dstImg = srcImg;

}

}

else

{

if (noseDetection)

{

Point tl(faceX, faceY);

Point dr((faceX + faceWidth), (faceY + faceHeight));

Rect myROI(tl, dr);

Mat croppedImage_original = srcImg(myROI);

Mat noseposition_image;

resize(croppedImage_original, noseposition_image, Size(200, 200), 0, 0, INTER_CUBIC);

float rotation = gradienty(noseposition_image);

if (rotation_def){

rotate(srcImg, rotation, dstImg);

}

else {

dstImg = srcImg;

}

}

else{

dstImg = srcImg;

}

}

std::vector faces;

face_cascade.detectMultiScale(dstImg, faces, 1.1, 3, CV_HAAR_DO_CANNY_PRUNING, Size(dstImg.size().width*0.2, dstImg.size().height*0.2));

for (size_t i = 0; i < faces.size(); i++)

{

int tlY = faces[i].y;

if (tlY < 0){ tlY = 0; } int drY = faces[i].y + faces[i].height; if (drY>dstImg.rows)

{

drY = dstImg.rows;

}

Point tl(faces[i].x, tlY);

Point dr(faces[i].x + faces[i].width, drY);

Rect myROI(tl, dr);

Mat croppedImage_original = dstImg(myROI);

Mat croppedImageGray;

resize(croppedImage_original, crop, Size(width, height), 0, 0, INTER_CUBIC);

imshow(“test”, crop);

}

As you can see I use another face detection to find cropping area. It is probably not the best option and it is not configured for more than one face, but after few enhancements it is suffitient. The next part is rotation by nose. This is purely experimental and doesn’t give very good results. I had to use average of 4 frames to determine the rotation and it is quite slow.

int plotHistogram(Mat image)

{

Mat dst;

/// Establish the number of bins

int histSize = 256;

/// Set the ranges

float range[] = { 0, 256 };

const float* histRange = { range };

bool uniform = true; bool accumulate = false;

Mat b_hist, g_hist, r_hist;

/// Compute the histograms:

calcHist(&image, 1, 0, Mat(), b_hist, 1, &histSize, &histRange, uniform, accumulate);

int hist_w = 750; int hist_h = 500;

int bin_w = cvRound((double)hist_w / histSize);

Mat histImage(hist_h, hist_w, CV_8UC3, Scalar(0, 0, 0));

/// Normalize the result to [ 0, histImage.rows ]

normalize(b_hist, b_hist, 0, histImage.rows, NORM_MINMAX, -1, Mat());

int sum = 0;

int max = 0;

int now;

int current = 0;

for (int i = 1; i < histSize; i++)

{

now = cvRound(b_hist.at(i));

// ak su uhly v rozsahu 350-360 alebo 0-10 dame ich do suctu

if ((i < 5))

{

max += now;

current = i;

}

}

return max;

}

float gradienty(Mat frame)

{

Mat src, src_gray;

int scale = 1;

int delta = 0;

src_gray = frame;

Mat grad_x, grad_y;

Mat abs_grad_x, abs_grad_y;

Mat magnitudes, angles;

Mat bin;

Mat rotated;

int max = 0;

int uhol = 0;

for (int i = -50; i < 50; i++) { rotate(src_gray, ((double)i / PI), rotated); Sobel(rotated, grad_x, CV_32F, 1, 0, 9, scale, delta, BORDER_DEFAULT); Sobel(rotated, grad_y, CV_32F, 0, 1, 9, scale, delta, BORDER_DEFAULT); cartToPolar(grad_x, grad_y, magnitudes, angles); angles.convertTo(bin, CV_8U, 90 / PI); Point tl((bin.cols / 2) – 10, (bin.rows / 2) – 20); Point dr((bin.cols / 2) + 10, (bin.rows / 2)); Rect myROI(tl, dr); Mat working_pasik = bin(myROI); int current = 0; current = plotHistogram(working_pasik); if (current > max)

{

max = current;

uhol = i;

}

}

noseQueue.push_back(uhol);

int suma = 0;

for (std::list::iterator it = noseQueue.begin(); it != noseQueue.end(); it++)

{

suma = suma + *it;

}

int priemer;

priemer = (int)((double)suma / (double)noseQueue.size());

if (noseQueue.size() > 3)

{

noseQueue.pop_front();

}

return priemer;

}

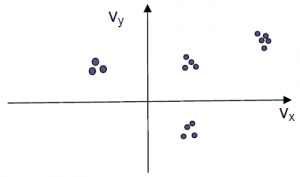

Main idea behind this is to compute vertical and horizontal sobel for nose part and find the angle between them. Then I determine which angle is dominant with help of histogram and I use its peak value in finding the best rotation of face. This part can be improved by normalizing the histogram on start and then using just values from one face rotation angle to determine the angle between vertical position and current angle.

Rotation is done simply by this function

void rotate(cv::Mat& src, double angle, cv::Mat& dst)

{

int len = max(src.cols, src.rows);

cv::Point2f pt(len / 2., len / 2.);

cv::Mat r = cv::getRotationMatrix2D(pt, angle, 1.0);

cv::warpAffine(src, dst, r, cv::Size(len, len));

}

And in the code earlier I showed you how to crop the face and then resize it to default size.

Use trained model for face recognition

We now have model of faces trained and enhanced image of face we want to recognize. Tricky part here is determining when the face is new (not in training set) and when it is recognized properly. So I created some sort of treshold for distance and found out that it lies between 11000 and 12000.

int predictedLabel = -1;

double predicted_confidence = 0.0;

model->set("threshold", treshold);

model->predict(croppedImage, predictedLabel, predicted_confidence);

Display result

After we found out whether the person is new or not we show results:

if (predictedLabel > -1)

{

text = labels[predictedLabel];

putText(frame, text, tl, fontFace, fontScale, Scalar::all(255), thickness, 8);

}