This example shows how to segment objects using OpenCV and Kinect for XBOX 360. The depth map retrieved from the Kinect sensor is aligned with the color image and then used to create a segmentation mask.

Functions used: convertTo, floodFill, inRange, copyTo

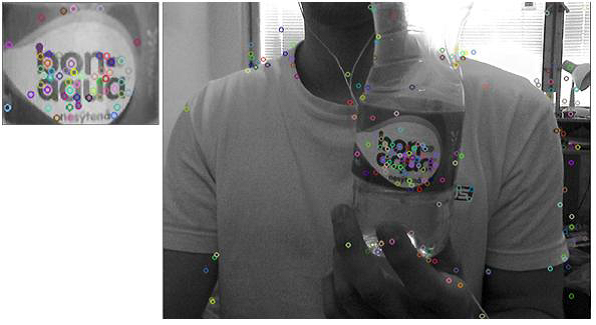

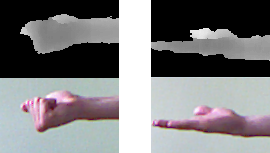

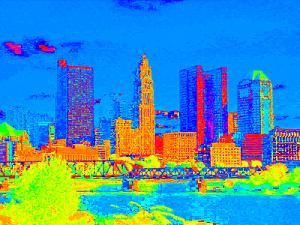

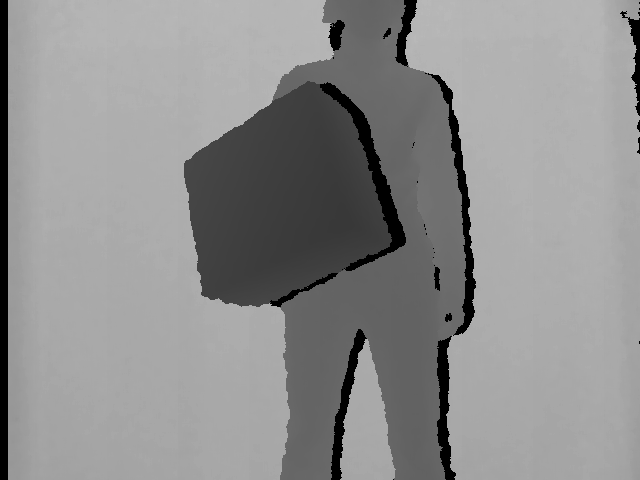

Inputs

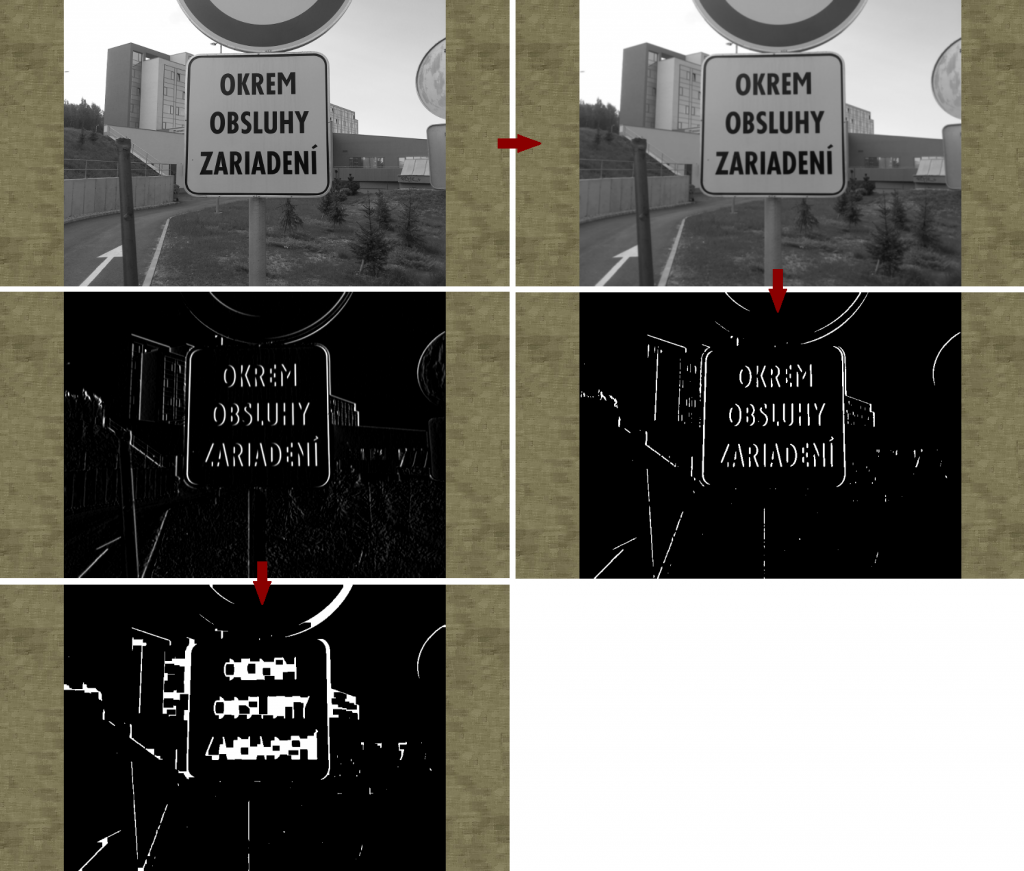

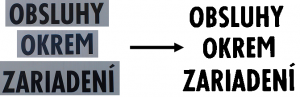

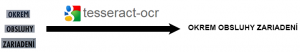

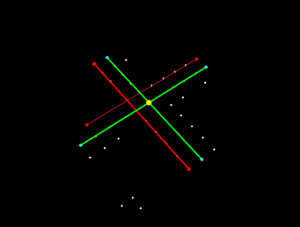

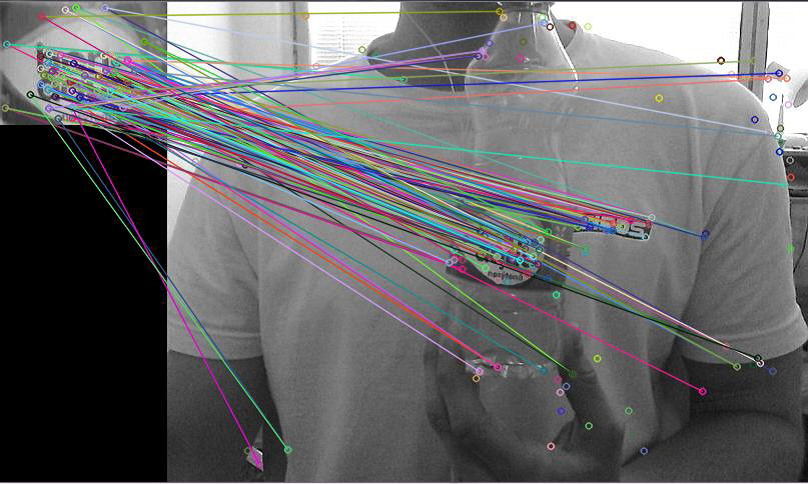

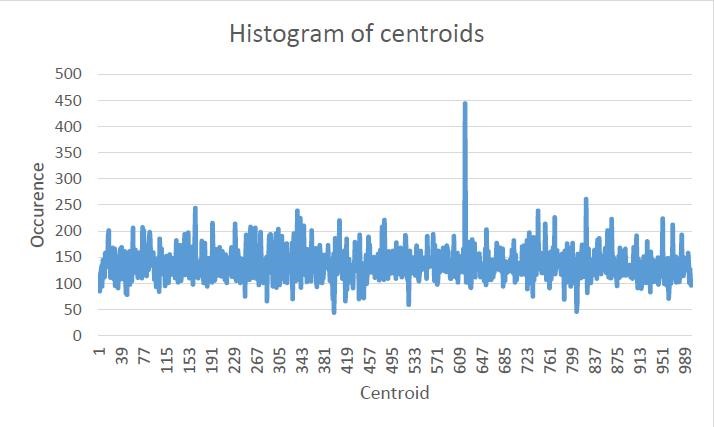

The process

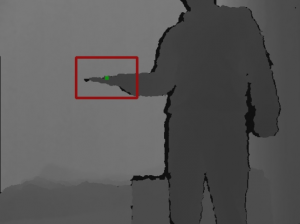

- Retrieve the color image and the depth map

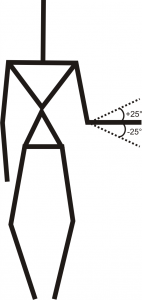

- Compute the coordinates of the depth map pixels so that they map onto the color image

- Align the depth map with the color image
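
The two steps above can be sketched without any sensor or OpenCV dependency. This is a minimal illustration, not the article's implementation: it assumes a precomputed lookup (the hypothetical `ColorCoord` table) that gives, for every depth pixel, the color-image pixel it lands on, and it assumes that a depth value of 0 means "no measurement". The names `ColorCoord` and `alignDepthToColor` are invented for this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical lookup entry: where one depth pixel lands in the color image.
struct ColorCoord { int x; int y; };

// Scatter depth samples into a buffer that has the color image's resolution.
// depth:        row-major depth samples
// depthToColor: one color-image coordinate per depth sample (same ordering)
// Returns a colorW * colorH buffer; 0 marks pixels that received no sample.
std::vector<std::uint16_t> alignDepthToColor(
    const std::vector<std::uint16_t>& depth,
    const std::vector<ColorCoord>& depthToColor,
    int colorW, int colorH)
{
    assert(depth.size() == depthToColor.size());
    std::vector<std::uint16_t> aligned(
        static_cast<std::size_t>(colorW) * colorH, 0);

    for (std::size_t i = 0; i < depth.size(); ++i) {
        const ColorCoord c = depthToColor[i];
        if (depth[i] == 0) continue;  // assumed "no measurement" marker
        if (c.x < 0 || c.x >= colorW || c.y < 0 || c.y >= colorH)
            continue;                 // sample falls outside the color image

        std::uint16_t& dst =
            aligned[static_cast<std::size_t>(c.y) * colorW + c.x];
        // If several depth pixels land on the same color pixel,
        // keep the one closest to the sensor.
        if (dst == 0 || depth[i] < dst) dst = depth[i];
    }
    return aligned;
}
```

Keeping the nearest sample on collisions is a design choice of this sketch; it favors foreground objects, which is usually what a segmentation mask is after.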

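    // Convert the 16-bit depth map to 32-bit float (values are unchanged).
    cv::Mat depth32F;
    depth16U.convertTo(depth32F, CV_32FC1);
    // mask is set to 255 where the depth lies in [1, 1200] and to 0 elsewhere.
    cv::inRange(depth32F, cv::Scalar(1.0f), cv::Scalar(1200.0f), mask);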
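
To make the effect of `convertTo` followed by `inRange` concrete, here is a library-free sketch of the same per-pixel logic. It is an illustration only: the function name `depthRangeMask` is hypothetical, and the image is modeled as a flat `std::vector` instead of a `cv::Mat`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Mimics convertTo(CV_32FC1) followed by inRange(lo, hi) on a flat buffer.
// Returns 255 where lo <= depth <= hi (both bounds inclusive), 0 elsewhere.
std::vector<std::uint8_t> depthRangeMask(
    const std::vector<std::uint16_t>& depth16U, float lo, float hi)
{
    assert(lo <= hi);
    std::vector<std::uint8_t> mask(depth16U.size(), 0);
    for (std::size_t i = 0; i < depth16U.size(); ++i) {
        // Plain conversion to float, no scaling or offset.
        const float d = static_cast<float>(depth16U[i]);
        if (d >= lo && d <= hi) mask[i] = 255;
    }
    return mask;
}
```

With the bounds from the snippet above, `depthRangeMask(depth, 1.0f, 1200.0f)` rejects pixels with a depth of 0 as well as everything farther than 1200.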

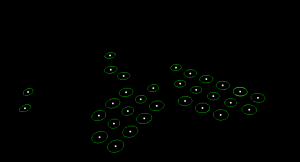

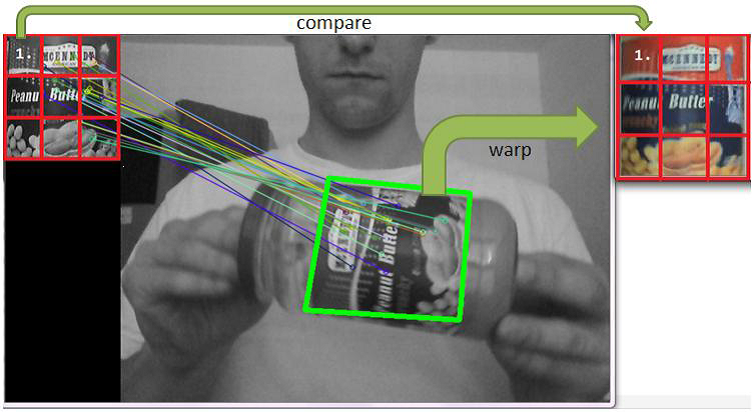

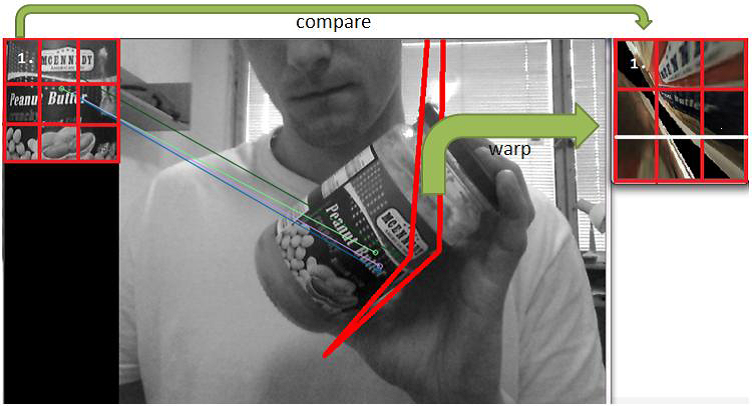

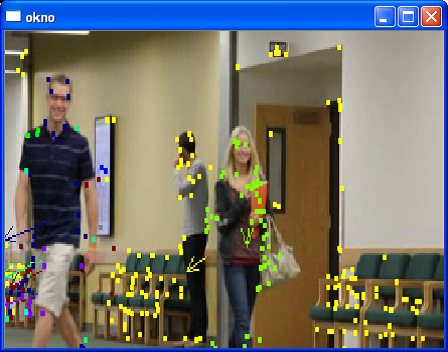

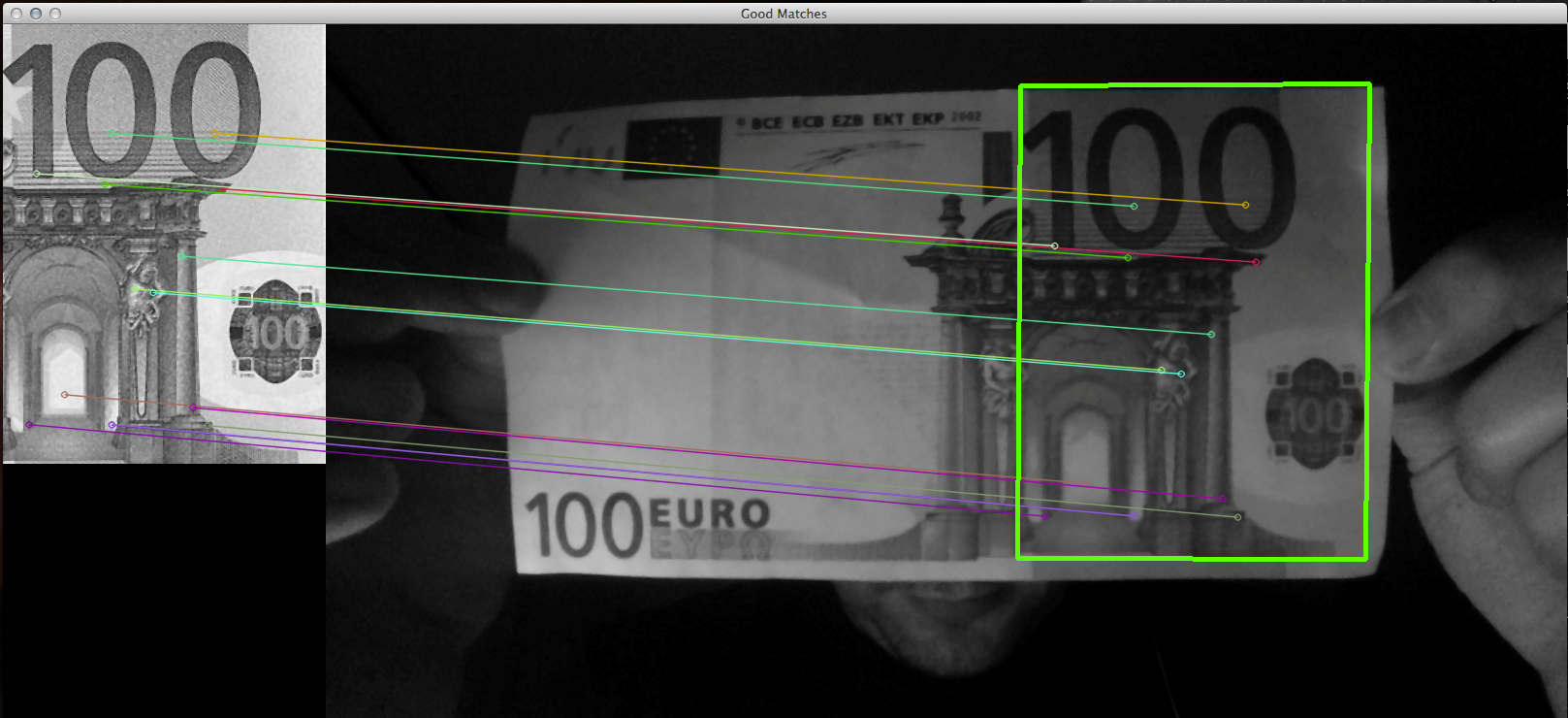

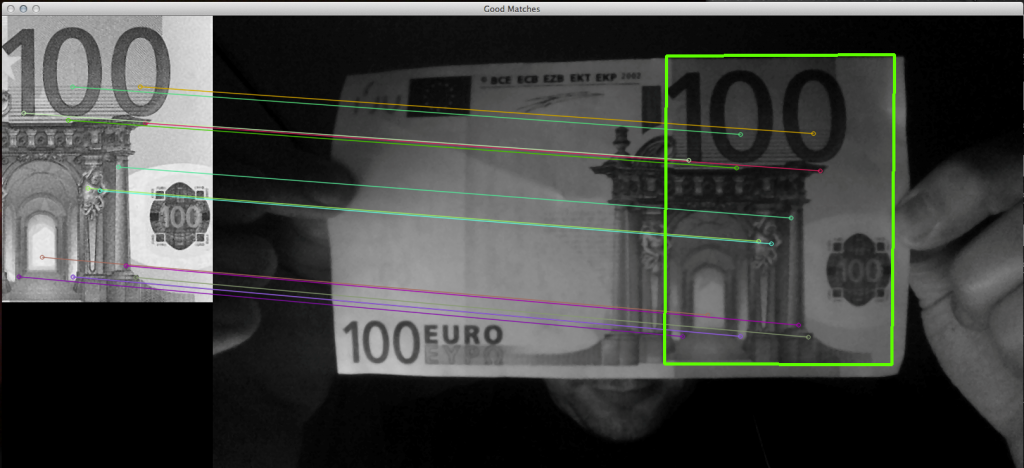

- Find seed point in aligned depth map
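
The text does not say how the seed point is chosen, so the following is only one plausible strategy, stated as an assumption: pick the valid pixel closest to the sensor. The names `SeedPoint` and `findNearestSeed` are hypothetical, and the aligned depth map is modeled as a flat row-major buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct SeedPoint { int x; int y; };

// Scan the aligned depth map and select the pixel with the smallest depth
// inside [minDepth, maxDepth]. Returns false if no pixel is in range,
// in which case seed is left unchanged.
bool findNearestSeed(const std::vector<float>& depth, int width, int height,
                     float minDepth, float maxDepth, SeedPoint& seed)
{
    assert(depth.size() == static_cast<std::size_t>(width) * height);
    bool found = false;
    float best = 0.0f;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const float d = depth[static_cast<std::size_t>(y) * width + x];
            if (d < minDepth || d > maxDepth) continue;  // invalid or too far
            if (!found || d < best) {
                best = d;
                seed.x = x;
                seed.y = y;
                found = true;
            }
        }
    }
    return found;
}
```

With OpenCV, a comparable result could be obtained by calling `cv::minMaxLoc` on the depth image with the range mask as its mask argument.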

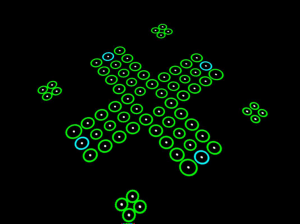

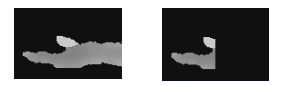

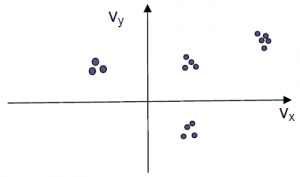

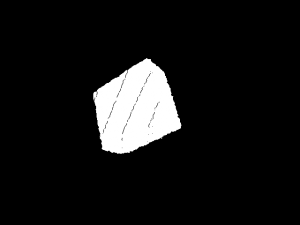

- Perform flood fill operation from seed point

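    // floodFill requires a mask 2 pixels wider and taller than the image.
    cv::Mat mask(cv::Size(colorImageWidth + 2, colorImageHeight + 2), CV_8UC1, cv::Scalar(0));
    // FLOODFILL_MASK_ONLY: depth32F is left untouched and only the mask is filled,
    // so the new value (0.0f) is ignored. A neighbor joins the region when its
    // depth differs by at most 20 from an adjacent pixel already in the region.
    cv::floodFill(depth32F, mask, seed, cv::Scalar(0.0f), NULL,
                  cv::Scalar(20.0f), cv::Scalar(20.0f), cv::FLOODFILL_MASK_ONLY);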
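
The following library-free sketch illustrates the kind of fill the call above requests: a 4-connected fill with a floating range, where each candidate pixel is compared against its already-filled neighbor instead of the seed, and where the result is written into a mask that is 2 pixels larger than the image. It is a simplified illustration, not OpenCV's implementation, and `floodFillMask` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <utility>
#include <vector>

// Returns a row-major (width + 2) x (height + 2) mask. Image pixel (x, y)
// corresponds to mask pixel (x + 1, y + 1); filled pixels are set to 1.
std::vector<std::uint8_t> floodFillMask(
    const std::vector<float>& depth, int width, int height,
    int seedX, int seedY, float loDiff, float upDiff)
{
    assert(depth.size() == static_cast<std::size_t>(width) * height);
    assert(seedX >= 0 && seedX < width && seedY >= 0 && seedY < height);

    const int maskW = width + 2;
    std::vector<std::uint8_t> mask(
        static_cast<std::size_t>(maskW) * (height + 2), 0);
    auto maskAt = [&](int x, int y) -> std::uint8_t& {
        return mask[static_cast<std::size_t>(y + 1) * maskW + (x + 1)];
    };

    std::queue<std::pair<int, int> > frontier;
    maskAt(seedX, seedY) = 1;
    frontier.push(std::make_pair(seedX, seedY));

    const int dx[4] = {1, -1, 0, 0};
    const int dy[4] = {0, 0, 1, -1};
    while (!frontier.empty()) {
        const int x = frontier.front().first;
        const int y = frontier.front().second;
        frontier.pop();
        const float ref = depth[static_cast<std::size_t>(y) * width + x];

        for (int k = 0; k < 4; ++k) {
            const int nx = x + dx[k];
            const int ny = y + dy[k];
            if (nx < 0 || nx >= width || ny < 0 || ny >= height) continue;
            if (maskAt(nx, ny) != 0) continue;  // already filled
            const float v = depth[static_cast<std::size_t>(ny) * width + nx];
            // Floating range: compare against the neighbor, not the seed.
            if (v >= ref - loDiff && v <= ref + upDiff) {
                maskAt(nx, ny) = 1;
                frontier.push(std::make_pair(nx, ny));
            }
        }
    }
    return mask;
}
```

Because the comparison is made between neighbors, a surface whose depth changes gradually (for example 100, 110, 125) is filled as one region even when the far end differs from the seed by more than the tolerance, while a sharp depth edge stops the fill.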

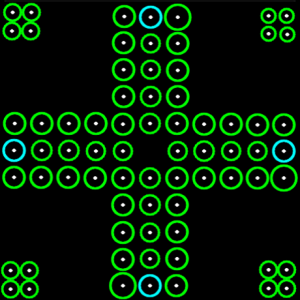

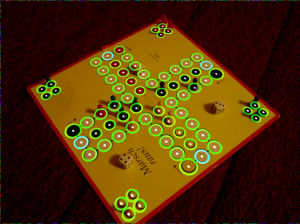

- Make a copy of color image using mask

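    // Wrap the existing 4-channel color frame without copying it
    // (row stride = colorImageWidth * 4 bytes).
    cv::Mat color(cv::Size(colorImageWidth, colorImageHeight), CV_8UC4,
                  (void *) colorImageFrame->pFrameTexture, colorImageWidth * 4);
    // Skip the 1-pixel border of the flood-fill mask so that it matches the
    // image size, then copy only the pixels where the mask is non-zero.
    color.copyTo(colorSegment, mask(cv::Rect(1, 1, colorImageWidth, colorImageHeight)));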
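
As a library-free illustration of the masked copy above, the sketch below copies 4-channel pixels wherever the interior of the enlarged flood-fill mask is non-zero. The name `copyWithFloodMask` is hypothetical, and both buffers are modeled as flat row-major vectors; pixels outside the mask are left at 0 here, which is an assumption of this sketch about the initial state of the destination.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// color: width * height pixels, 4 bytes each.
// mask:  (width + 2) * (height + 2) bytes; image pixel (x, y) is
//        controlled by mask pixel (x + 1, y + 1).
std::vector<std::uint8_t> copyWithFloodMask(
    const std::vector<std::uint8_t>& color,
    const std::vector<std::uint8_t>& mask,
    int width, int height)
{
    const int channels = 4;
    const int maskW = width + 2;
    assert(color.size() ==
           static_cast<std::size_t>(width) * height * channels);
    assert(mask.size() == static_cast<std::size_t>(maskW) * (height + 2));

    std::vector<std::uint8_t> segment(color.size(), 0);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            // Offset by (1, 1) to skip the mask border.
            if (mask[static_cast<std::size_t>(y + 1) * maskW + (x + 1)] == 0)
                continue;
            const std::size_t p =
                (static_cast<std::size_t>(y) * width + x) * channels;
            for (int c = 0; c < channels; ++c)
                segment[p + c] = color[p + c];
        }
    }
    return segment;
}
```

Any non-zero mask value selects a pixel, so a mask filled with 1 works just as well as one filled with 255.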

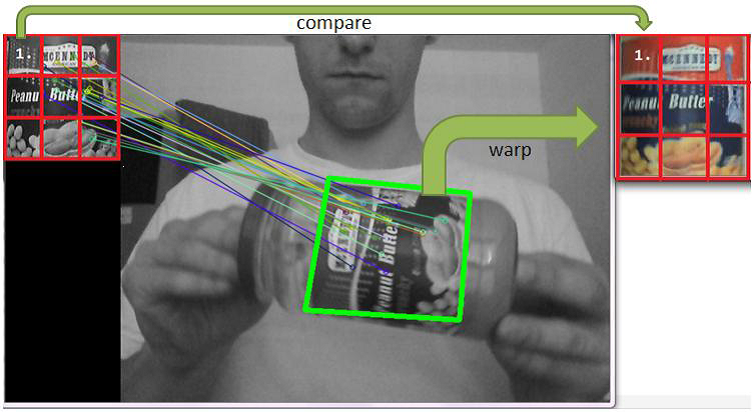

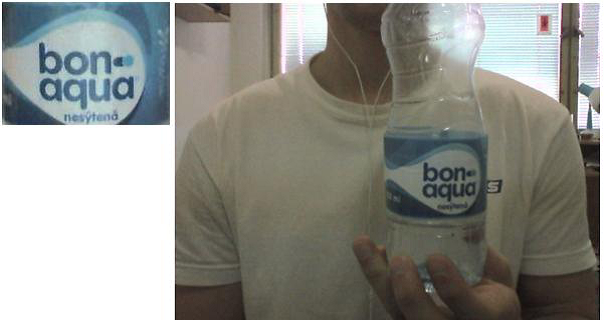

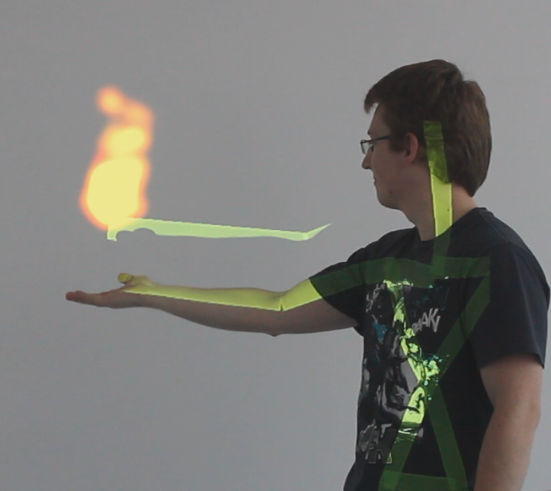

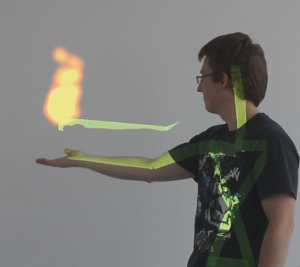

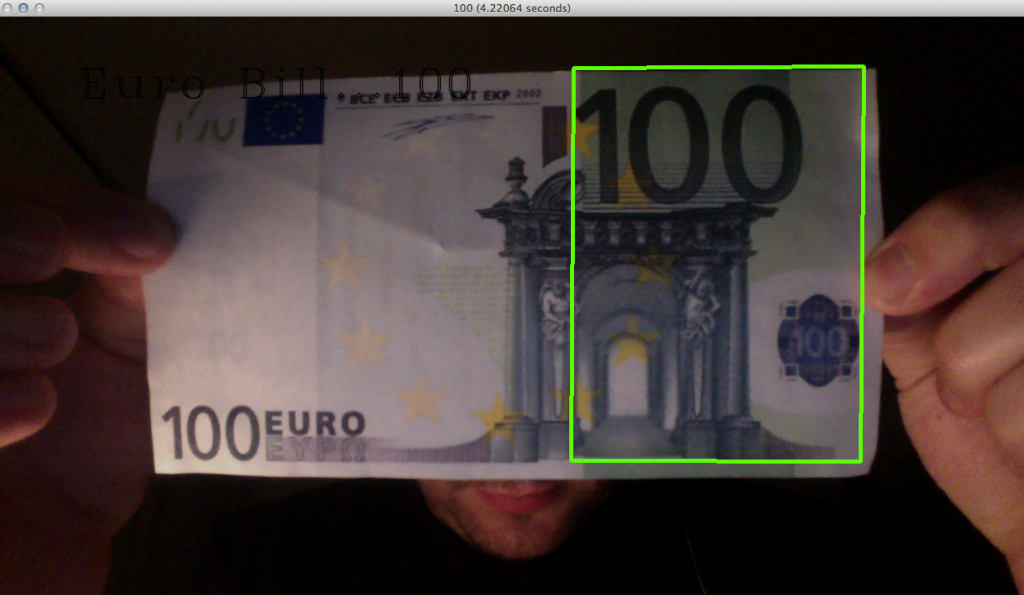

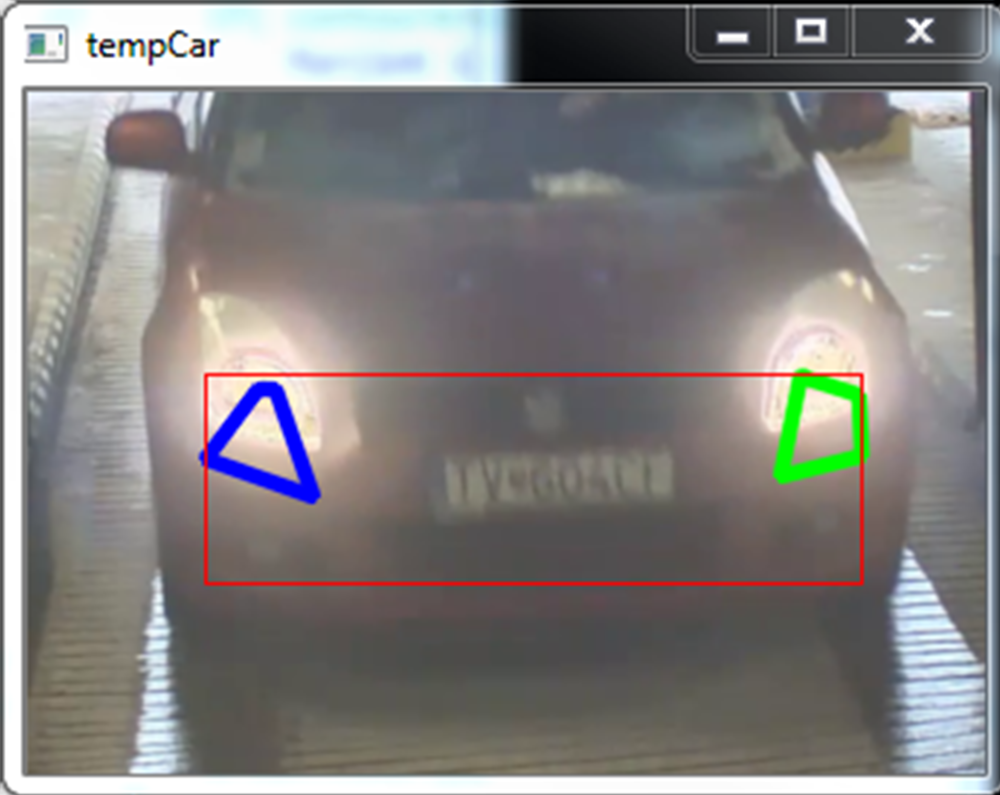

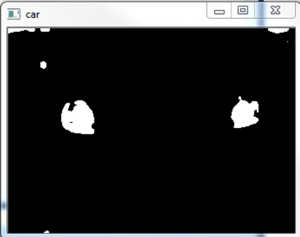

Sample

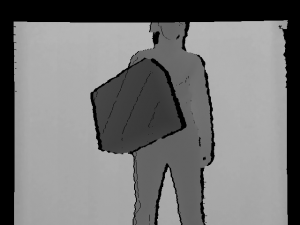

Result