Michal Viskup

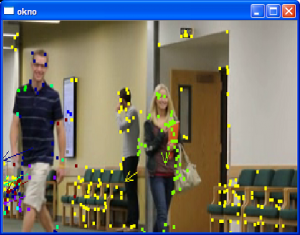

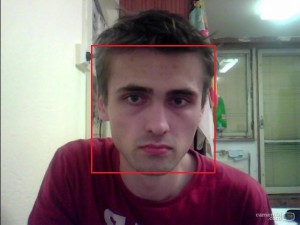

We detect and recognize human faces in a video stream. Each detected face is either recognized, in which case its label is drawn next to the face rectangle, or it is labelled as unknown.

The video stream is obtained from a Kinect v2 sensor. The sensor offers several data streams; we mention only the two relevant to our work:

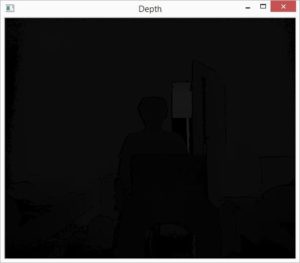

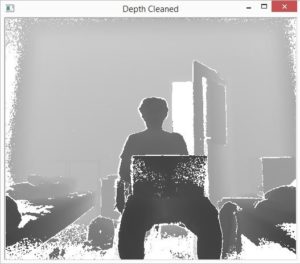

- RGB stream (resolution: 1920×1080, depth: 8 bits)

- Depth stream (resolution: 512×424, depth: 16 bits)

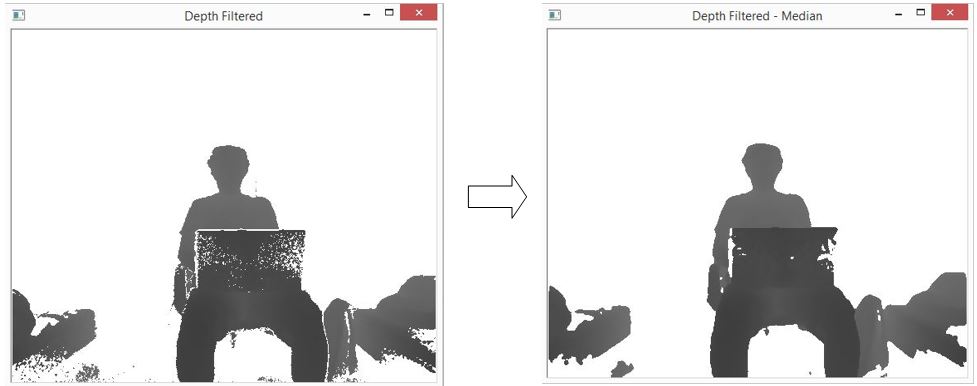

The RGB stream is self-explanatory. The depth stream consists of values that denote the distance of each pixel from the sensor. The reliable range starts at 0.5 m, and the sensor reports distances up to 8 m. However, past the 4.5 m mark, the reliability of the data is questionable. Kinect offers methods that map pixels from the RGB stream to the depth stream and vice versa.
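The ranges above can be turned into a small check that is applied to every depth sample before it is used. The following is a minimal sketch, not part of the original implementation. The names `DepthQuality` and `classifyDepth` are hypothetical, and the limits are assumptions: 500 mm and 4500 mm are the values the Kinect v2 SDK normally reports as its reliable range, and 8000 mm is the far end of the range mentioned above.

```cpp
#include <cstdint>

// Reliability classes for a single depth sample given in millimetres.
enum class DepthQuality { Invalid, Reliable, Questionable };

// Assumed limits (see the lead-in); adjust them to the values your sensor reports.
constexpr std::uint16_t kMinReliableMm = 500;
constexpr std::uint16_t kMaxReliableMm = 4500;
constexpr std::uint16_t kMaxRangeMm = 8000;

// Classifies one depth sample according to the assumed limits.
DepthQuality classifyDepth(std::uint16_t depthMm)
{
    if (depthMm < kMinReliableMm || depthMm > kMaxRangeMm)
    {
        return DepthQuality::Invalid;
    }
    if (depthMm <= kMaxReliableMm)
    {
        return DepthQuality::Reliable;
    }
    return DepthQuality::Questionable;
}
```

Samples classified as `Questionable` can still be displayed, but it is probably safer to exclude them from steps such as the nose-tip search.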

We use the facial data from the RGB stream for recognition. The depth data is used to enhance face segmentation through nose-tip detection.

First of all, the face recognizer has to be trained. Training is done only once: the state of the trained recognizer can be persisted in XML format and reloaded later without repeating the training. OpenCV offers implementations of three face recognition methods:

- Eigenfaces

- Fisherfaces

- Local Binary Pattern Histograms

We used the Eigenfaces and Fisherfaces methods. The code that creates the face recognizer follows:

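// The recognizer is shared with trainRecognizer() and the prediction code, so it is declared outside the function.

Ptr<FaceRecognizer> fr;

void initRecognizer()

{

fr = createEigenFaceRecognizer();

trainRecognizer();

}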

It is as simple as that. A face recognizer that uses the Fisherfaces method is created analogously with createFisherFaceRecognizer(). Ptr is OpenCV's reference-counting smart pointer, so the recognizer is released automatically.

The recognizer is not trained yet, so every face presented to it would be labelled as unknown. Training requires two vectors:

- The vector of facial images in the OpenCV Mat format

- The vector of integer values containing the identifiers for the facial images

These vectors can be created manually, but that does not scale to large training sets. We therefore provide an automated way to create them. The data for each subject should be placed in a separate directory, and all subject directories should be placed within a single directory (referred to as the root directory). The algorithm is given access to the root directory, processes all the subject directories, and creates both the image vector and the label vector. We find the Windows API for accessing the file system inconvenient. UNIX-based systems, on the other hand, offer a convenient C API through the dirent interface. The Visual Studio compiler lacks the dirent interface, so we used an external library that provides it (http://softagalleria.net/dirent.php). The following code requires this library:

First, we obtain the list of subject names, which are the names of the directories within the root directory. The subject names are stored in a vector of strings, which can be initialized manually or from a text file.

Then, for each subject, the path to their directory is created:

std::ostringstream fullSubjectPath;

fullSubjectPath << ROOT_DIRECTORY_PATH;

fullSubjectPath << "\\";

fullSubjectPath << subjectName;

fullSubjectPath << "\\";

We then obtain the list of file names that reside within the subject directory:

std::vector<std::string> DataProvider::getFileNamesForDirectory(const std::string subjectDirectoryPath)

{

std::vector<std::string> fileNames;

DIR *dir;

struct dirent *ent;

if ((dir = opendir(subjectDirectoryPath.c_str())) != NULL) {

while ((ent = readdir(dir)) != NULL) {

if ((strcmp(ent->d_name, ".") == 0) || (strcmp(ent->d_name, "..") == 0))

{

continue;

}

fileNames.push_back(ent->d_name);

}

closedir(dir);

}

else {

std::cout << "Cannot open the directory: " << subjectDirectoryPath << std::endl;

}

return fileNames;

}

Then, the images are loaded and stored in a vector:

std::vector<std::string> subjectFileNames = getFileNamesForDirectory(fullSubjectPath.str());

std::vector<cv::Mat> subjectImages;

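for (const std::string &fileName : subjectFileNames)

{

std::ostringstream fullFileNameBuilder;

fullFileNameBuilder << fullSubjectPath.str();

fullFileNameBuilder << fileName;

// Load as grayscale: the recognizer is later given grayscale faces, so the training data must match.

cv::Mat subjectImage = cv::imread(fullFileNameBuilder.str(), cv::IMREAD_GRAYSCALE);

// Skip files that could not be decoded as images.

if (subjectImage.empty())

{

continue;

}

subjectImages.push_back(subjectImage);

}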

return subjectImages;

Finally, the label vector is created, with one entry per loaded image:

for (size_t i = 0; i < subjectImages.size(); i++){

trainingLabels.push_back(label);

}

With images and labels vectors ready, the training is a one-liner:

fr->train(images,labels);

The recognizer is now trained. What we need next is a video stream and a depth stream to recognize from.

The Kinect sensor is initialized by the following code:

void initKinect()

{

HRESULT hr;

hr = GetDefaultKinectSensor(&kinectSensor);

if (FAILED(hr))

{

return;

}

if (kinectSensor)

{

// Initialize the Kinect and get the readers

IColorFrameSource* colorFrameSource = NULL;

IDepthFrameSource* depthFrameSource = NULL;

hr = kinectSensor->Open();

if (SUCCEEDED(hr))

{

hr = kinectSensor->get_ColorFrameSource(&colorFrameSource);

}

if (SUCCEEDED(hr))

{

hr = colorFrameSource->OpenReader(&colorFrameReader);

}

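// The source is still NULL if an earlier call failed, so guard the release.

if (colorFrameSource)

{

colorFrameSource->Release();

}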

if (SUCCEEDED(hr))

{

hr = kinectSensor->get_DepthFrameSource(&depthFrameSource);

}

if (SUCCEEDED(hr))

{

hr = depthFrameSource->OpenReader(&depthFrameReader);

}

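// The source is still NULL if an earlier call failed, so guard the release.

if (depthFrameSource)

{

depthFrameSource->Release();

}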

}

if (!kinectSensor || FAILED(hr))

{

return;

}

}

The following function obtains the next color frame from the Kinect sensor:

Mat getNextColorFrame()

{

IColorFrame* nextColorFrame = NULL;

IFrameDescription* colorFrameDescription = NULL;

ColorImageFormat colorImageFormat = ColorImageFormat_None;

HRESULT errorCode = colorFrameReader->AcquireLatestFrame(&nextColorFrame);

if (FAILED(errorCode))

{

Mat empty;

return empty;

}

if (SUCCEEDED(errorCode))

{

errorCode = nextColorFrame->get_FrameDescription(&colorFrameDescription);

}

int matrixWidth = 0;

if (SUCCEEDED(errorCode))

{

errorCode = colorFrameDescription->get_Width(&matrixWidth);

}

int matrixHeight = 0;

if (SUCCEEDED(errorCode))

{

errorCode = colorFrameDescription->get_Height(&matrixHeight);

}

if (SUCCEEDED(errorCode))

{

errorCode = nextColorFrame->get_RawColorImageFormat(&colorImageFormat);

}

Mat frameKinect;

if (SUCCEEDED(errorCode))

{

// Let the Mat own its pixel buffer, so that nothing leaks and the data outlives this function.

frameKinect.create(matrixHeight, matrixWidth, CV_8UC4);

UINT bufferSize = matrixWidth * matrixHeight * 4;

errorCode = nextColorFrame->CopyConvertedFrameDataToArray(bufferSize, frameKinect.data, ColorImageFormat_Bgra);

}

if (FAILED(errorCode))

{

// Return an empty matrix rather than a partially filled one.

frameKinect.release();

}

if (colorFrameDescription)

{

colorFrameDescription->Release();

}

if (nextColorFrame)

{

nextColorFrame->Release();

}

return frameKinect;

}

An analogous function obtains the next depth frame. The only changes are the type and size of the buffer, as the depth frame has a single channel with 16 bits per pixel.
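Because the depth frame holds 16-bit distances, it has to be rescaled before it can be shown as an ordinary 8-bit grayscale image. The following is a minimal sketch of such a conversion on a plain buffer, without any Kinect or OpenCV calls. `depthToGray` is a hypothetical helper, and subtracting the lower limit before scaling and clamping values outside the range are design choices of this sketch.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Maps 16-bit depth samples (millimetres) to 8-bit gray values.
// Samples at or below minMm map to 0, samples at or above maxMm map to 255.
std::vector<std::uint8_t> depthToGray(const std::vector<std::uint16_t> &depthMm,
                                      std::uint16_t minMm, std::uint16_t maxMm)
{
    std::vector<std::uint8_t> gray(depthMm.size(), 0);
    if (maxMm <= minMm)
    {
        return gray; // degenerate range: return an all-black image
    }
    const double scale = 255.0 / (maxMm - minMm);
    for (std::size_t i = 0; i < depthMm.size(); ++i)
    {
        // Clamp into [minMm, maxMm] so the result always fits into 8 bits.
        const std::uint16_t clamped = std::min(std::max(depthMm[i], minMm), maxMm);
        gray[i] = static_cast<std::uint8_t>((clamped - minMm) * scale + 0.5);
    }
    return gray;
}
```

With the limits set to the sensor's minimum and maximum reliable distances, near objects appear dark and far objects appear bright.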

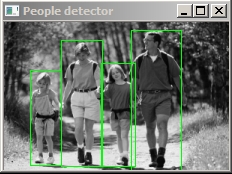

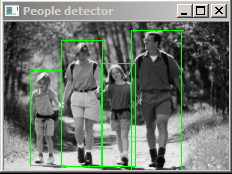

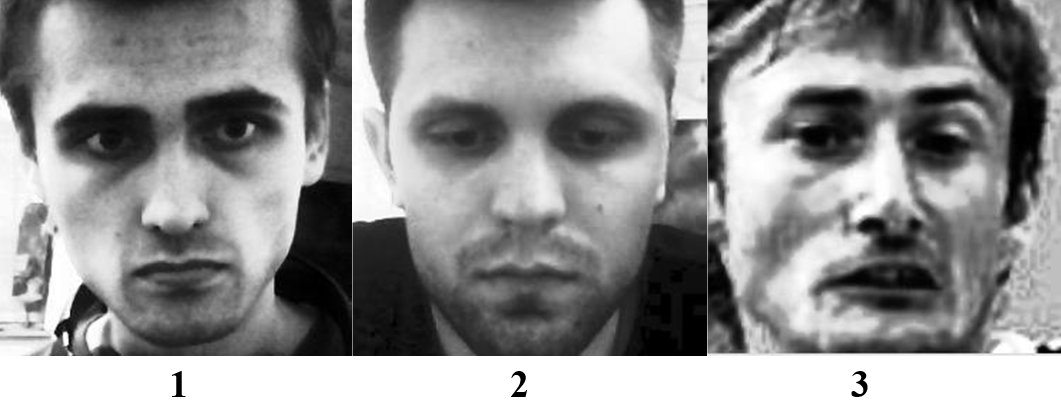

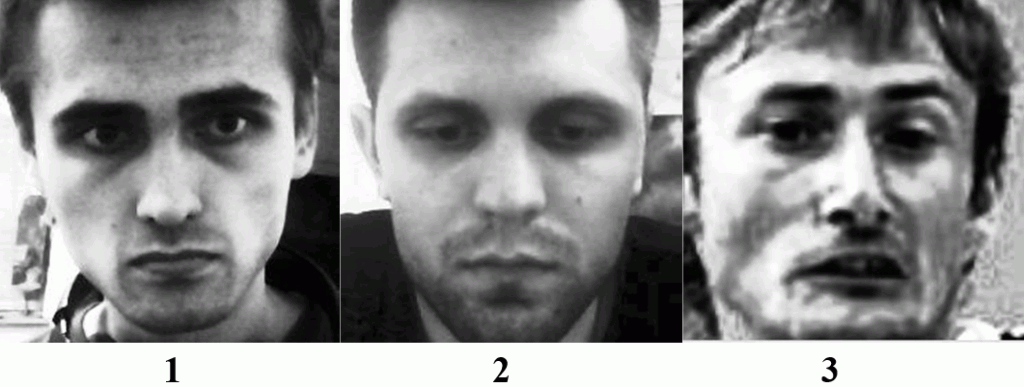

Finally, we are all set for recognition. The face recognition task consists of the following steps:

- Detect the faces in the video frame

- Crop the faces and process them

- Predict the identity

For face detection, we use the OpenCV CascadeClassifier. OpenCV provides pre-trained feature sets for the classifier for both frontal and profile faces. In video, however, both slight and major deviations from these poses occur. We therefore increase the tolerance for false positives to avoid losing track of a face between frames.

The classifier is initialized by loading the feature set with its load function:

CascadeClassifier cascadeClassifier;

cascadeClassifier.load(PATH_TO_FEATURES_XML);

The face detection is done as follows:

vector<Mat> getFaces(const Mat frame, vector<Rect_<int>> &rectangles)

{

Mat grayFrame;

cvtColor(frame, grayFrame, CV_BGR2GRAY);

cascadeClassifier.detectMultiScale(grayFrame, rectangles, 1.1, 5);

vector<Mat> faces;

for (Rect_<int> face : rectangles){

Mat detectedFace = grayFrame(face);

Mat faceResized;

resize(detectedFace, faceResized, Size(240, 240), 1.0, 1.0, INTER_CUBIC);

faces.push_back(faceResized);

}

return faces;

}

With the faces detected, we can proceed to recognition. The recognition process is as follows:

Mat colorFrame = getNextColorFrame();

vector<Rect_<int>> rectangles;

vector<Mat> faces = getFaces(colorFrame, rectangles);

// Predict the identity of every detected face and draw the label at the top-left corner of its rectangle.

for (size_t i = 0; i < faces.size(); i++)

{

int label = fr->predict(faces[i]);

string box_text = format("Prediction = %d", label);

putText(colorFrame, box_text, rectangles[i].tl(), FONT_HERSHEY_PLAIN, 1.0, CV_RGB(0, 255, 0), 2);

}
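The snippet above draws the raw integer label. To show a name, or "unknown" for unrecognized faces, the label can be mapped to text first. The following is a minimal sketch with a hypothetical helper `displayName`. It assumes that the recognizer was created with a distance threshold, in which case OpenCV's `predict` returns -1 for faces that are too far from every trained subject, and that the map from labels to names is filled in during training.

```cpp
#include <map>
#include <string>

// Turns a predicted label into the text drawn next to the face.
// A negative label, or a label with no entry in the map, is shown as "unknown".
std::string displayName(int label, const std::map<int, std::string> &subjectNames)
{
    const auto it = subjectNames.find(label);
    if (label < 0 || it == subjectNames.end())
    {
        return "unknown";
    }
    return it->second;
}
```

The returned string can be passed to `putText` in place of the formatted integer.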

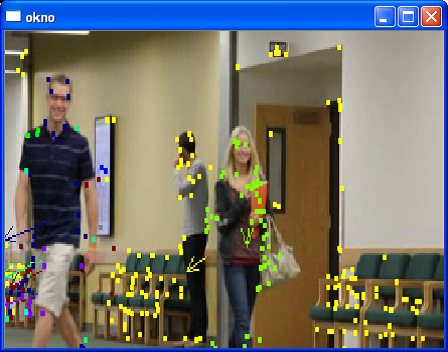

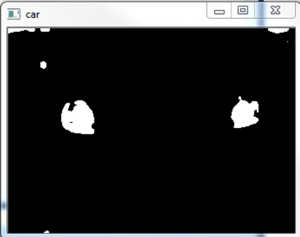

Nose tip detection is done as follows:

unsigned short minReliableDistance;

unsigned short maxReliableDistance;

Mat depthFrame = getNextDepthFrame(&minReliableDistance, &maxReliableDistance);

double scale = 255.0 / (maxReliableDistance - minReliableDistance);

depthFrame.convertTo(depthFrame, CV_16UC1, scale);

// Detect the nose tip.

// Only search for the nose tip in the head area.

Mat depthHeadRegion = depthFrame(rectangles[i]);

// The nose is probably the local minimum in the head area.

double min, max;

Point minLoc, maxLoc;

minMaxLoc(depthHeadRegion, &min, &max, &minLoc, &maxLoc);

minLoc.x += rectangles[i].x;

minLoc.y += rectangles[i].y;

// Draw a circle at the proposed nose position.

circle(depthFrame, minLoc, 5, 255, -1);
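A plain minimum search can be misled by pixels for which the sensor has no measurement, since these are typically reported as zero and would win every comparison. The following is a minimal sketch of a minimum search that skips such pixels, written against a plain row-major buffer so that it does not depend on OpenCV. `PixelPos` and `nearestPixel` are hypothetical names, and treating zero as "no measurement" is an assumption of this sketch.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Position of a pixel in image coordinates; found is false if no valid pixel exists.
struct PixelPos { int x; int y; bool found; };

// Finds the nearest valid pixel inside the rectangle [x0, x0 + w) x [y0, y0 + h)
// of a row-major depth image. The rectangle is clipped to the image bounds.
PixelPos nearestPixel(const std::vector<std::uint16_t> &depth, int imageWidth, int imageHeight,
                      int x0, int y0, int w, int h)
{
    PixelPos best{ -1, -1, false };
    std::uint16_t bestDepth = 0;
    for (int y = std::max(y0, 0); y < std::min(y0 + h, imageHeight); ++y)
    {
        for (int x = std::max(x0, 0); x < std::min(x0 + w, imageWidth); ++x)
        {
            const std::uint16_t d = depth[static_cast<std::size_t>(y) * imageWidth + x];
            if (d == 0)
            {
                continue; // assumed to mean "no measurement"
            }
            if (!best.found || d < bestDepth)
            {
                best = { x, y, true };
                bestDepth = d;
            }
        }
    }
    return best;
}
```

The rectangle passed in has to be expressed in depth-image coordinates. A face rectangle detected in the 1920×1080 color frame would first need to be mapped to the 512×424 depth frame using the mapping methods that Kinect offers.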

To conclude, we provide a simple implementation that detects and recognizes human faces in a video. One possible improvement is to use the detected nose tip for face tracking, rather than allowing more false positives in the detection phase.