Depth cues are important factors that influence the visual saliency of objects around us. However, depth and its quantified impact on visual saliency have not yet been thoroughly examined in real environments. We designed and carried out an experimental study to examine the influence of depth cues on the visual saliency of objects in a scene. The study took place with 28 participants under laboratory conditions, with objects arranged in various depth configurations in a real scene. Visual attention data were measured with wearable eye-tracking glasses.

Author: Patrik Polatsek

Color Saliency

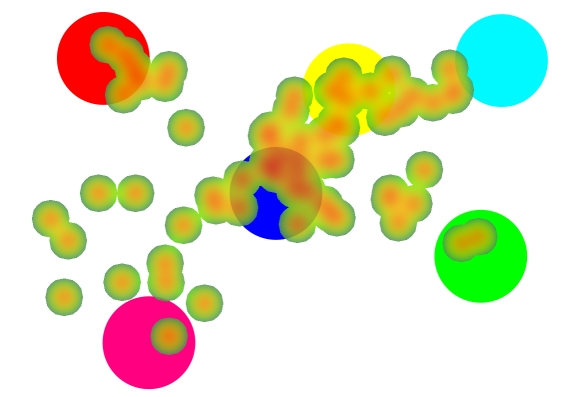

Color is a fundamental component of visual attention. Saliency is usually associated with color contrasts. Besides this bottom-up perspective, some recent works indicate that psychological aspects should be considered too. However, relatively little research has been done on the potential impact of color psychology on attention. To the best of our knowledge, a publicly available fixation dataset specialized in color features does not exist. We therefore conducted a novel eye-tracking experiment with color stimuli. We studied the fixations of 15 participants to find out whether color differences can reliably model color saliency or whether particular colors are preferentially fixated regardless of scene content, i.e. a color prior.
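To make the "color differences" hypothesis concrete, the following is a minimal sketch of a global color-contrast score, in which each pixel is rated by its mean color distance to all other pixels. It is an illustration only and not the model used in the experiment. The function name `color_contrast_saliency` is hypothetical, and plain Euclidean distance on raw color triples is an assumption made for brevity; a perceptually uniform space such as CIELAB would usually be a better choice.

```python
from math import dist


def color_contrast_saliency(pixels):
    """Score each pixel by its mean color distance to all other pixels.

    `pixels` is a flat list of color triples, e.g. (r, g, b).
    Scores are scaled so that the most contrasting pixel gets 1.0.
    Assumption: Euclidean distance on the raw triples stands in for
    a perceptual color difference.
    """
    n = len(pixels)
    if n < 2:
        return [0.0] * n
    # Distance of a pixel to itself is 0, so dividing by n - 1 gives
    # the mean distance to the *other* pixels.
    raw = [sum(dist(p, q) for q in pixels) / (n - 1) for p in pixels]
    peak = max(raw)
    return [r / peak if peak > 0 else 0.0 for r in raw]


# Three black pixels and one red pixel: the red pixel stands out.
scores = color_contrast_saliency([(0, 0, 0), (0, 0, 0), (0, 0, 0), (255, 0, 0)])
```

A color prior, by contrast, would assign a score to a color independently of the other colors in the scene, which is the alternative the experiment was designed to test.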

Effects of individual’s emotions on saliency and visual search

Patrik Polatsek, Miroslav Laco, Šimon Dekrét, Wanda Benesova, Martina Baránková, Bronislava Strnádelová, Jana Koróniová, Mária Gablíková

Abstract.

While psychological studies have confirmed a connection between emotional stimuli and visual attention, there is little evidence of how much influence an individual’s mood has on the visual processing of emotionally neutral stimuli. In contrast to prior studies, we explored whether bottom-up low-level saliency could be affected by positive mood. We therefore induced positive or neutral emotions in 10 subjects using autobiographical memories during free viewing, memorizing the image content, and three visual search tasks. We explored differences in human gaze behavior between the two emotions and related the subjects’ fixations to bottom-up saliency predicted by a traditional computational model. We observed that positive emotions produce a stronger saliency effect only during free exploration of valence-neutral stimuli. However, the opposite effect was observed during task-based analysis. We also found that tasks were solved less efficiently when experiencing a positive mood, and we therefore suggest that it rather distracts users from a task.

download: saliency-emotions

Please cite this paper if you use the dataset:

Polatsek, P., Laco, M., Dekrét, Š., Benesova, W., Baránková, M., Strnádelová, B., Koróniová, J., & Gablíková, M. (2019)

Effects of individual’s emotions on saliency and visual search

Computational Models of Shape Saliency

Patrik Polatsek, Marek Jakab, Wanda Benesova, Matej Kužma

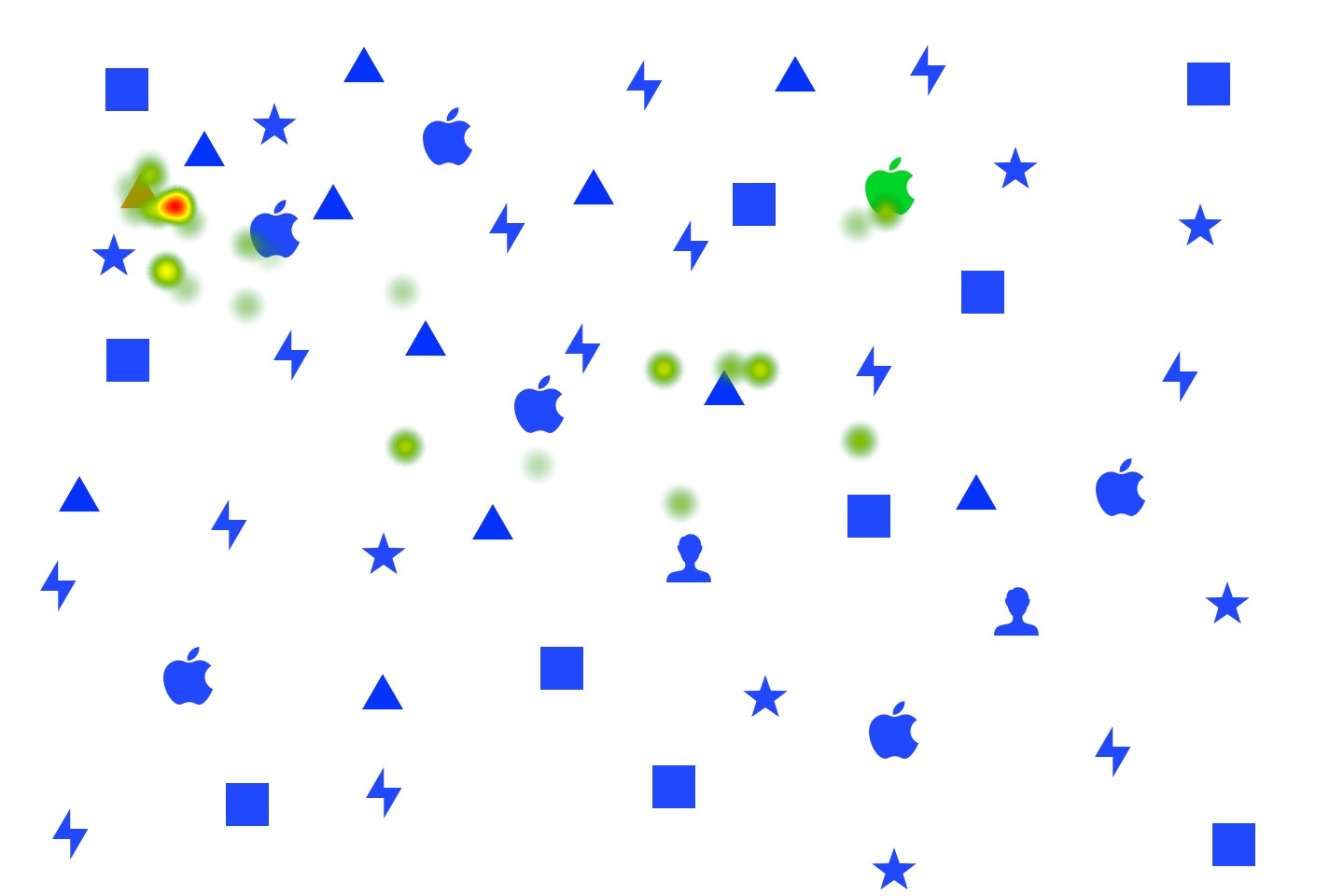

Abstract. Computational models predicting stimulus-driven human visual attention usually incorporate simple visual features, such as intensity, color and orientation. However, the saliency of shapes and their contour segments influences attention too. Therefore, we built 30 shape saliency models of our own based on existing shape representation and matching techniques and compared them with 5 existing saliency methods. Since available fixation datasets were usually recorded on natural scenes where various factors of attention are present, we performed a novel eye-tracking experiment that primarily focuses on shape and contour saliency. Fixations from 47 participants who looked at silhouettes of abstract and real-world objects were used to evaluate the accuracy of the proposed saliency models and to investigate which shape properties attract the most attention. The results showed that visual attention integrates local contour saliency, saliency of global shape features and shape dissimilarities. Fixation data also showed that intensity and orientation contrasts play an important role in shape perception. We found that humans tend to first fixate irregular geometrical shapes and objects whose similarity to a circle differs from that of other objects.
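As an illustration of how a saliency model can be scored against recorded fixations, here is a minimal sketch of Normalized Scanpath Saliency (NSS), a commonly used fixation-based metric. The abstract does not state which metrics the paper used, so NSS is an assumption chosen for illustration, and the function name `nss` and the input layout (a 2D list of saliency values plus `(row, col)` fixation coordinates) are hypothetical.

```python
from statistics import mean, pstdev


def nss(saliency_map, fixations):
    """Normalized Scanpath Saliency.

    The saliency map is z-scored (zero mean, unit standard deviation),
    and the z-scores at the fixated pixels are averaged. Values above 0
    mean fixations landed on regions the model rated above average.

    saliency_map: 2D list of numbers (rows of pixels), assumed layout.
    fixations: iterable of (row, col) pixel coordinates, assumed layout.
    """
    values = [v for row in saliency_map for v in row]
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        raise ValueError("saliency map is constant; NSS is undefined")
    return mean((saliency_map[r][c] - mu) / sigma for r, c in fixations)


# Toy 2x2 map with a single salient pixel, fixated once.
toy_map = [[0.0, 0.0],
           [0.0, 1.0]]
score = nss(toy_map, [(1, 1)])
```

Averaging such scores over scenes and participants gives one number per model, which makes it straightforward to rank several models on the same fixation data.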

shapeSal dataset contains an extended version of this eye-tracking experiment including images and fixation data (73 participants, 158 scenes).

download: shapeSal.zip [V2.0; update: 25.3.2019]

Please cite this paper if you use the dataset:

Polatsek, P., Jakab, M., Benesova, W., & Kužma, M. (2019)

Computational Models of Shape Saliency

11th International Conference on Machine Vision (ICMV 2018) (Vol. 11041)

International Society for Optics and Photonics

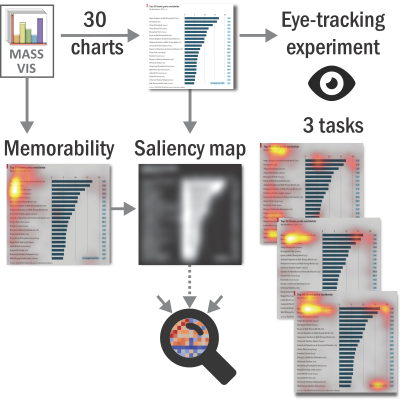

Exploring Visual Attention and Saliency Modeling for Task-Based Visual Analysis

Patrik Polatsek, Manuela Waldner, Ivan Viola, Peter Kapec, Wanda Benesova

Abstract. Memory, visual attention, and perception play a critical role in the design of visualizations. The way users observe a visualization is affected by salient stimuli in a scene as well as by domain knowledge, interest, and the task. While recent saliency models manage to predict the users’ visual attention in visualizations during exploratory analysis, there is little evidence of how much influence bottom-up saliency has on task-based visual analysis. Therefore, we performed an eye-tracking study with 47 users to determine the users’ path of attention when solving three low-level analytical tasks using 30 different charts from the MASSVIS database. We also compared our task-based eye-tracking data to the data from the original memorability experiment by Borkin et al. We found that solving a task leads to more consistent viewing patterns compared to exploratory visual analysis. However, bottom-up saliency of visualization has negligible influence on users’ fixations and task efficiency when performing a low-level analytical task. Also, the efficiency of visual search for an extreme target data point is barely influenced by the target’s bottom-up saliency. Therefore, we conclude that bottom-up saliency models tailored toward information visualization are not suitable for predicting visual attention when performing task-based visual analysis. We discuss potential reasons and suggest extensions to visual attention models to better account for task-based visual analysis.

TASKVIS dataset contains eye-tracking data from this task-based visual analysis experiment.

download: taskvis.zip

Please cite this paper if you use the dataset:

Polatsek, P., Waldner, M., Viola, I., Kapec, P., & Benesova, W. (2018)

Exploring Visual Attention and Saliency Modeling for Task-Based Visual Analysis

Computers & Graphics, 72, 26-38