Marek Grznar

Introduction

In this project we focus on simple object recognition, then on tracking the recognized object, and finally on deleting it from the video. For object recognition we use local feature-based methods and compare SIFT and SURF for keypoint detection and description. We then compute the homography with the RANSAC algorithm. If these algorithms find the object, we create a mask in which the recognized object is a white area and the rest is black. For object tracking we compare two approaches. The first calculates optical flow using the iterative Lucas-Kanade method with pyramids. The second is based on the CamShift tracking algorithm. To delete the object from the video, we use an algorithm that restores the selected region of an image from the region's neighborhood.

Used functions: floodFill, findHomography, match, fillPoly, goodFeaturesToTrack, calcOpticalFlowPyrLK, inpaint, mixChannels, calcHist, CamShift

Solution

- Open the video file, retrieve the next frame (picture), and convert it from color to grayscale

    cap.open("Video1.mp4");                  // open the input video
    cap >> frame;                            // grab the next frame
    frame.copyTo(image);
    cvtColor(image, gray, COLOR_BGR2GRAY);   // color (BGR) to grayscale

- Find object in frame (picture)

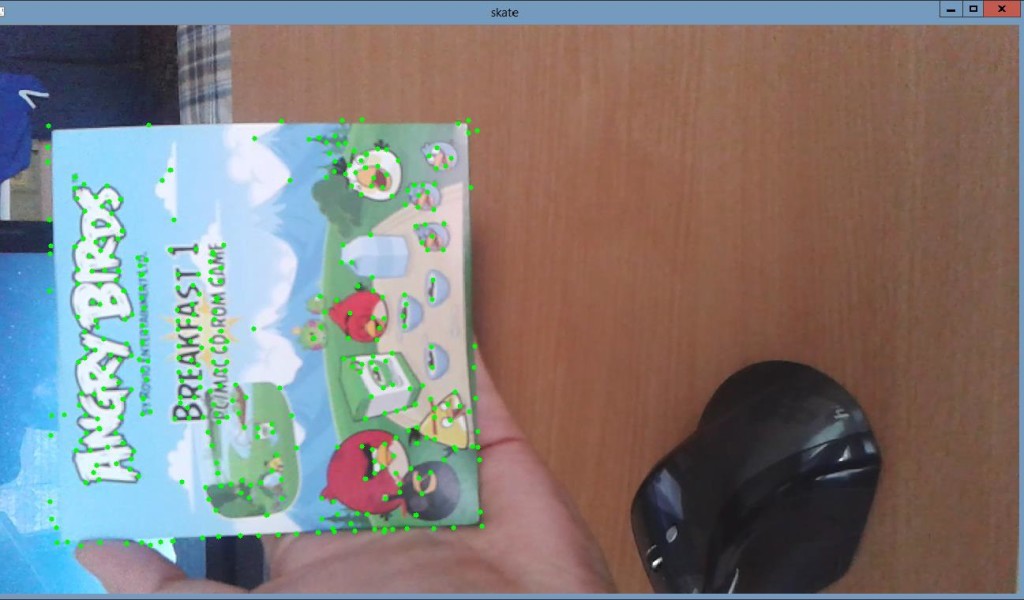

- Keypoint detection and description (SIFT/SURF)

    // SIFT variant (note: the first SIFT constructor argument is the number
    // of features to keep, not a Hessian threshold):
    // SiftFeatureDetector detector( minHessian );
    SurfFeatureDetector detector(minHessian);
    std::vector<KeyPoint> keypoints_object, keypoints_scene;
    detector.detect(img_object, keypoints_object);
    detector.detect(img_scene, keypoints_scene);

    // SIFT variant:
    // SiftDescriptorExtractor extractor;
    SurfDescriptorExtractor extractor;
    Mat descriptors_object, descriptors_scene;
    extractor.compute(img_object, keypoints_object, descriptors_object);
    extractor.compute(img_scene, keypoints_scene, descriptors_scene);

- Matching keypoints

    FlannBasedMatcher matcher;
    std::vector<DMatch> matches;
    matcher.match(descriptors_object, descriptors_scene, matches);
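The `obj` and `scene` point lists passed to `findHomography` in the next step are not shown in the source. The following is a minimal sketch of one common way to build them, assuming matches are kept when their distance is within a fixed factor of the best match. `Match` and `Pt` are hypothetical stand-ins that mirror the `queryIdx`, `trainIdx`, and `distance` fields of `cv::DMatch` and the coordinates of a keypoint; `collectGoodPairs` and the factor 3 are assumptions, not values taken from the project.

```cpp
#include <algorithm>
#include <limits>
#include <vector>

// Hypothetical stand-ins mirroring cv::DMatch and a keypoint position.
struct Match { int queryIdx; int trainIdx; float distance; };
struct Pt { float x; float y; };

// Matched positions: obj[i] in the object image corresponds to scene[i].
struct PointPairs { std::vector<Pt> obj; std::vector<Pt> scene; };

// Keeps matches whose distance is at most `ratio` times the smallest
// distance (an assumed heuristic) and collects the matched positions.
PointPairs collectGoodPairs(const std::vector<Match>& matches,
                            const std::vector<Pt>& objectKeypoints,
                            const std::vector<Pt>& sceneKeypoints,
                            float ratio = 3.0f) {
    PointPairs pairs;
    if (matches.empty()) return pairs;

    float minDist = std::numeric_limits<float>::max();
    for (const Match& m : matches) minDist = std::min(minDist, m.distance);

    const float threshold = ratio * minDist;
    for (const Match& m : matches) {
        if (m.distance <= threshold) {
            // queryIdx indexes the object keypoints, trainIdx the scene ones.
            pairs.obj.push_back(objectKeypoints[m.queryIdx]);
            pairs.scene.push_back(sceneKeypoints[m.trainIdx]);
        }
    }
    return pairs;
}
```

A homography needs at least four point pairs, so a caller would normally check `pairs.obj.size() >= 4` before estimating it. If the best match has distance zero, this threshold keeps only exact matches; adding a small absolute floor to the threshold is one way around that.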

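- Homography calculation

    // obj and scene hold the coordinates of the matched keypoints
    // in the object image and in the scene image
    Mat H = findHomography(obj, scene, CV_RANSAC);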

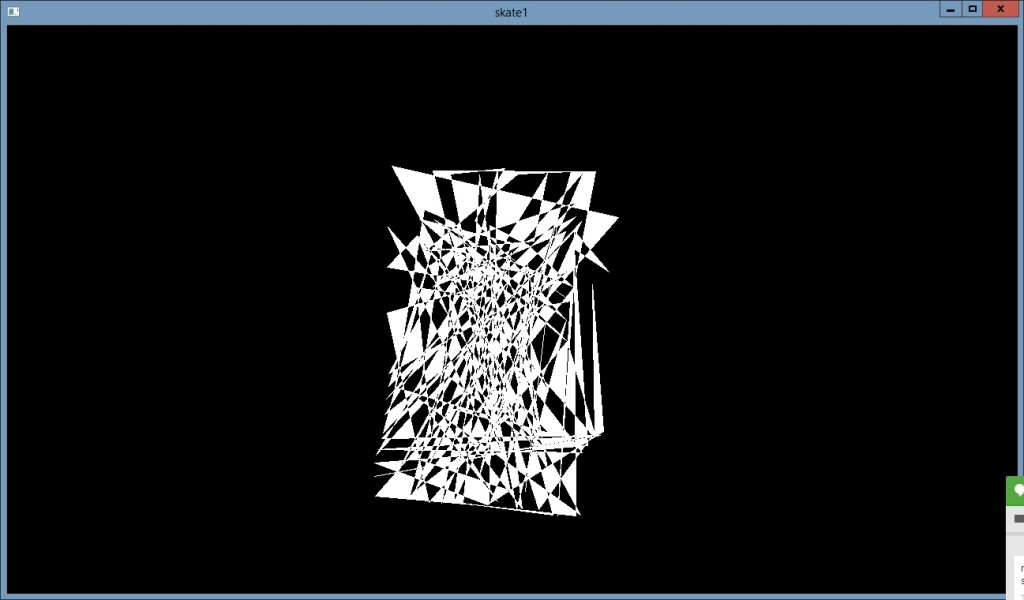

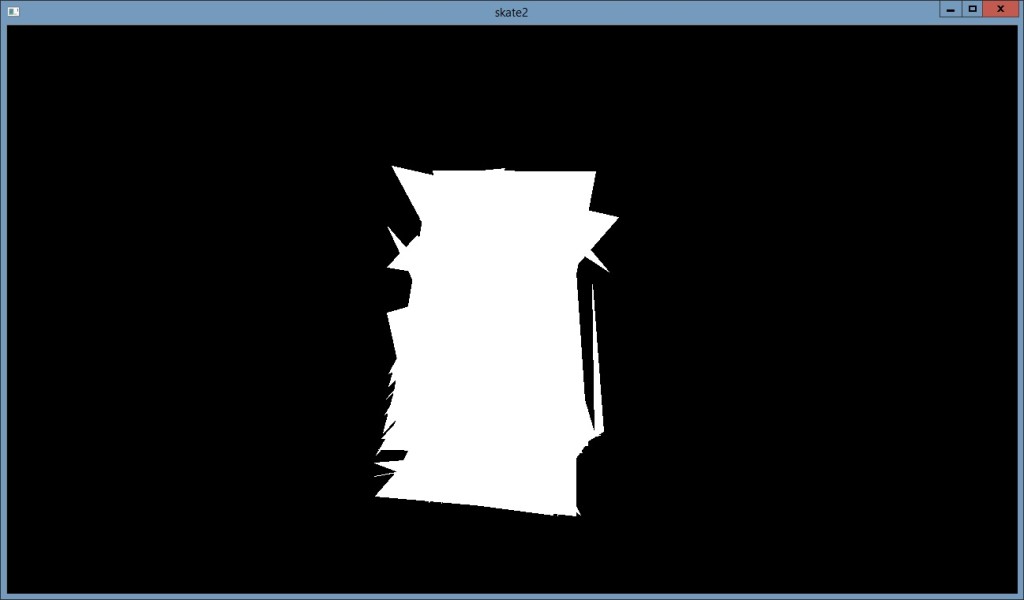
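Once `H` is known, the outline of the object in the scene follows from mapping the four corners of the object image through the homography. The sketch below spells out that mapping in plain C++ (OpenCV's `perspectiveTransform` performs the same operation on point vectors). `Corner`, `Mat3`, and both function names are hypothetical, and the corner order is an assumption.

```cpp
#include <array>
#include <cmath>
#include <vector>

// Hypothetical stand-ins for a 2D point and a 3x3 matrix of doubles.
struct Corner { double x; double y; };
using Mat3 = std::array<std::array<double, 3>, 3>;

// Applies the homography H to p using homogeneous coordinates.
// Returns false when the point maps to infinity (w close to zero).
bool projectPoint(const Mat3& H, const Corner& p, Corner& out) {
    const double x = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    const double y = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    if (std::fabs(w) < 1e-12) return false;
    out.x = x / w;
    out.y = y / w;
    return true;
}

// Maps the corners of a width x height object image into the scene, in the
// order top-left, top-right, bottom-right, bottom-left.
// Returns an empty vector if any corner cannot be projected.
std::vector<Corner> projectCorners(const Mat3& H, double width, double height) {
    const Corner corners[4] = {
        {0.0, 0.0}, {width, 0.0}, {width, height}, {0.0, height}};
    std::vector<Corner> projected;
    for (const Corner& c : corners) {
        Corner q;
        if (!projectPoint(H, c, q)) return {};
        projected.push_back(q);
    }
    return projected;
}
```

The four projected corners form the polygon that the mask step fills in white.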

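- Mask creation

    // pts points to the polygon vertices of the recognized object, n is their count
    cv::Mat mask(img_scene.size().height, img_scene.size().width, CV_8UC1);
    mask.setTo(Scalar::all(0));                           // everything black
    cv::fillPoly(mask, &pts, &n, 1, Scalar::all(255));    // object area white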

First tracking approach

- Find significant points in current frame (using mask with recognized object)

- Track the significant points from the previous frame to the next
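In the project these two steps rely on `goodFeaturesToTrack` and `calcOpticalFlowPyrLK`. To show what the tracker computes at its core, here is a minimal sketch of a single Lucas-Kanade step for one window, without the pyramids and the iterations that the OpenCV function adds. The `Image` and `Flow` types and the function name are hypothetical, and gradients are approximated with central differences as a simplifying assumption.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical minimal grayscale image stored row by row.
struct Image {
    int width;
    int height;
    std::vector<double> data;
    double at(int x, int y) const {
        return data[static_cast<std::size_t>(y) * width + x];
    }
};

struct Flow { double dx; double dy; bool valid; };

// One Lucas-Kanade step for the square window centered at (cx, cy) with
// half-size r. Solves the 2x2 system
//   [sum IxIx  sum IxIy] [dx]   [-sum IxIt]
//   [sum IxIy  sum IyIy] [dy] = [-sum IyIt]
// where Ix, Iy are spatial gradients of prev and It = next - prev.
Flow lucasKanadeStep(const Image& prev, const Image& next,
                     int cx, int cy, int r) {
    // The window plus a one-pixel border must lie inside the image.
    if (cx - r < 1 || cy - r < 1 ||
        cx + r > prev.width - 2 || cy + r > prev.height - 2) {
        return {0.0, 0.0, false};
    }
    double sxx = 0.0, sxy = 0.0, syy = 0.0, sxt = 0.0, syt = 0.0;
    for (int y = cy - r; y <= cy + r; ++y) {
        for (int x = cx - r; x <= cx + r; ++x) {
            const double ix = 0.5 * (prev.at(x + 1, y) - prev.at(x - 1, y));
            const double iy = 0.5 * (prev.at(x, y + 1) - prev.at(x, y - 1));
            const double it = next.at(x, y) - prev.at(x, y);
            sxx += ix * ix;
            sxy += ix * iy;
            syy += iy * iy;
            sxt += ix * it;
            syt += iy * it;
        }
    }
    // A near-zero determinant means the window has too little texture.
    const double det = sxx * syy - sxy * sxy;
    if (std::fabs(det) < 1e-9) return {0.0, 0.0, false};
    const double dx = (-syy * sxt + sxy * syt) / det;
    const double dy = (sxy * sxt - sxx * syt) / det;
    return {dx, dy, true};
}
```

The determinant check is related to why corner-like points are chosen for tracking: on flat or edge-only regions the system is ill-conditioned and the displacement cannot be recovered reliably.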

- Deleting object from image

- Calculate mask of current object position

- Modify mask of current object position

- Restore the selected region in an image using the region neighborhood.

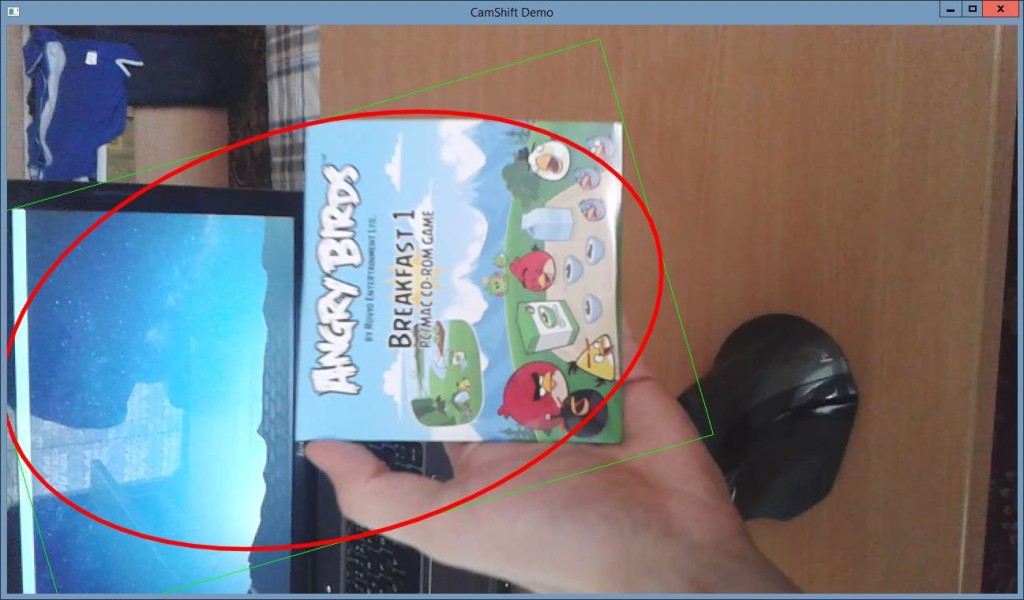
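The project uses OpenCV's `inpaint` for the restoration step. As an illustration of the general idea of rebuilding a masked region from its neighborhood, the sketch below uses a much simpler stand-in: it fills the region layer by layer with the mean of already known neighbors. This is not the method implemented by `inpaint`; `GrayImage` and `fillFromNeighborhood` are hypothetical names.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical minimal grayscale image stored row by row.
struct GrayImage {
    int width;
    int height;
    std::vector<double> px;
};

// Fills every pixel whose mask entry is nonzero, working inward from the
// border of the masked region. In each pass, a masked pixel that has at
// least one known 4-neighbor receives the mean of those neighbors.
void fillFromNeighborhood(GrayImage& img, std::vector<unsigned char> mask) {
    const int dx[4] = {1, -1, 0, 0};
    const int dy[4] = {0, 0, 1, -1};
    bool changed = true;
    while (changed) {
        changed = false;
        std::vector<double> nextPx = img.px;
        std::vector<unsigned char> nextMask = mask;
        for (int y = 0; y < img.height; ++y) {
            for (int x = 0; x < img.width; ++x) {
                const std::size_t idx =
                    static_cast<std::size_t>(y) * img.width + x;
                if (mask[idx] == 0) continue;  // pixel is already known
                double sum = 0.0;
                int count = 0;
                for (int k = 0; k < 4; ++k) {
                    const int nx = x + dx[k];
                    const int ny = y + dy[k];
                    if (nx < 0 || ny < 0 ||
                        nx >= img.width || ny >= img.height) continue;
                    const std::size_t n =
                        static_cast<std::size_t>(ny) * img.width + nx;
                    if (mask[n] == 0) { sum += img.px[n]; ++count; }
                }
                if (count > 0) {
                    nextPx[idx] = sum / count;
                    nextMask[idx] = 0;
                    changed = true;
                }
            }
        }
        img.px.swap(nextPx);
        mask.swap(nextMask);
    }
}
```

As with `inpaint`, the mask marks the pixels to be replaced with nonzero values. If the mask is too tight, leftover object pixels at the boundary are treated as known and bleed into the filled area, which is one plausible reason for modifying the mask before restoration.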

Second tracking approach

- Calculate histogram of ROI

- Calculate the back projection of histogram

- Track the object using CamShift
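The three steps above correspond to `calcHist`, back projection, and `CamShift` in OpenCV. The following plain C++ sketch illustrates the underlying idea on a hue image: build a hue histogram of the ROI, turn it into a per-pixel weight map, and move the window to the weighted centroid until it stops moving. It implements plain mean shift only; CamShift additionally adapts the window size and orientation. All type and function names, the hue range 0..179, and the bin count are assumptions.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a rectangle: top-left corner plus size.
struct Window { int x; int y; int w; int h; };

// Histogram of the hue values (0..179) inside roi, scaled so that the
// largest bin is 1. Assumes bins > 0 and roi inside the image.
std::vector<double> hueHistogram(const std::vector<int>& hue, int width,
                                 const Window& roi, int bins) {
    std::vector<double> hist(bins, 0.0);
    for (int y = roi.y; y < roi.y + roi.h; ++y)
        for (int x = roi.x; x < roi.x + roi.w; ++x)
            hist[hue[y * width + x] * bins / 180] += 1.0;
    const double maxBin = *std::max_element(hist.begin(), hist.end());
    if (maxBin > 0.0)
        for (double& b : hist) b /= maxBin;
    return hist;
}

// Back projection: each pixel gets the histogram value of its hue bin.
std::vector<double> backProject(const std::vector<int>& hue,
                                const std::vector<double>& hist) {
    const int bins = static_cast<int>(hist.size());
    std::vector<double> weights(hue.size());
    for (std::size_t i = 0; i < hue.size(); ++i)
        weights[i] = hist[hue[i] * bins / 180];
    return weights;
}

// Mean shift: recenters the window on the weighted centroid of the pixels
// it covers until the position no longer changes.
Window meanShift(const std::vector<double>& weights, int width, int height,
                 Window win, int maxIter = 20) {
    for (int iter = 0; iter < maxIter; ++iter) {
        double m00 = 0.0, m10 = 0.0, m01 = 0.0;
        for (int y = win.y; y < win.y + win.h; ++y) {
            for (int x = win.x; x < win.x + win.w; ++x) {
                const double w = weights[y * width + x];
                m00 += w;
                m10 += w * x;
                m01 += w * y;
            }
        }
        if (m00 <= 0.0) break;  // nothing to follow inside the window
        // Place the window so that its center lands on the centroid.
        int nx = static_cast<int>(std::lround(m10 / m00 - (win.w - 1) / 2.0));
        int ny = static_cast<int>(std::lround(m01 / m00 - (win.h - 1) / 2.0));
        nx = std::max(0, std::min(nx, width - win.w));
        ny = std::max(0, std::min(ny, height - win.h));
        if (nx == win.x && ny == win.y) break;  // converged
        win.x = nx;
        win.y = ny;
    }
    return win;
}
```

In a tracking loop, the histogram would be computed once from the recognized object, while the back projection and the window update would be repeated for every frame, starting from the window found in the previous frame.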

Object recognition

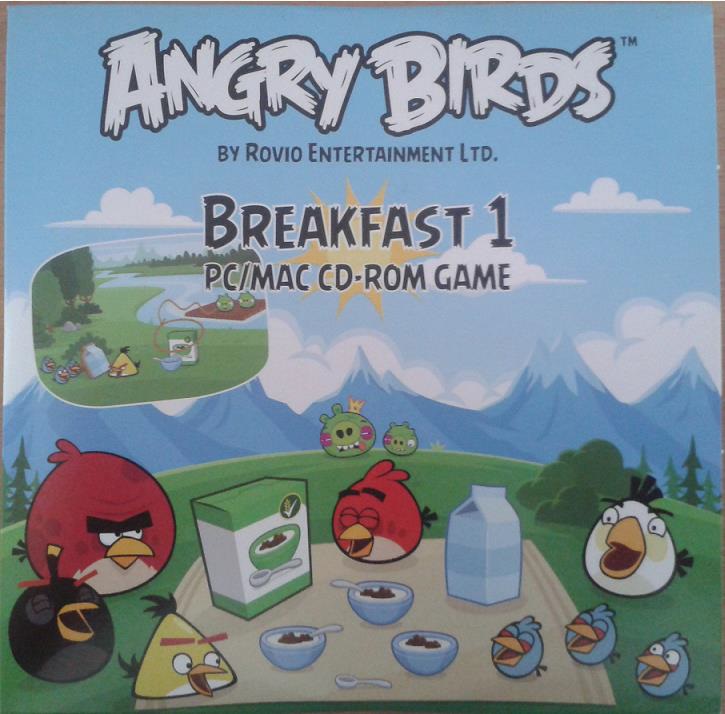

Input

Outputs

Tracking object

Input (tracked object)

Outputs