Filip Mazan

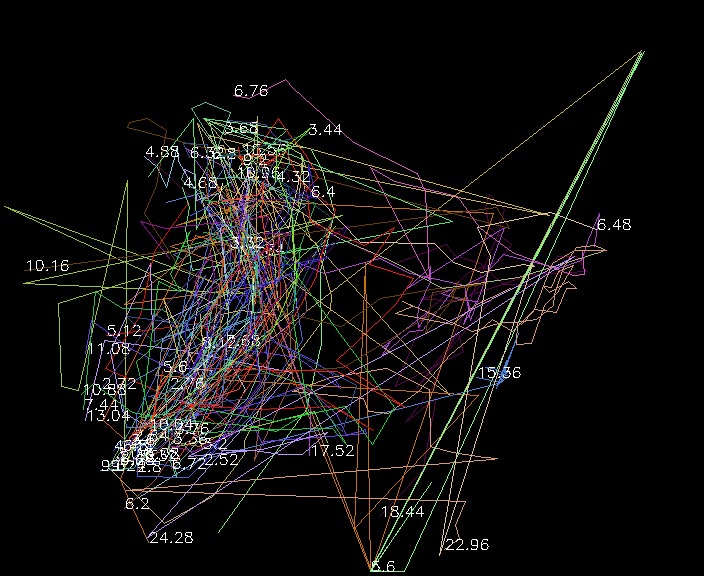

This project analyzes CCTV video captures to detect people’s motion and extract their trajectories over time. It produces two outputs. The first is a relatively short video file containing only those frames of the original in which movement was detected, with the people’s trajectories drawn on them. The second is a cumulative image of all trajectories, which can later be used to classify trajectories as (not) suspicious.

- Each frame of the input video is converted to grayscale and median-filtered to remove noise

- The first 30 seconds of the video are used as a learning phase for the MOG2 background subtractor

- For each subsequent frame, the MOG2 mask is calculated and morphological closing is applied to it

- If the count of non-zero pixels in the mask is greater than a set threshold, we consider movement to be present

- New good features to track are detected if only a few tracked points remain

- Optical flow is calculated

- Each point that has moved is stored along with its frame number

- If there is no movement in the current frame, the last movement interval (if any) is postprocessed

- All stored tracking points (x, y, frame number) from the previous phase are clustered by k-means into a variable number of centroids

- All centroids are sorted by their frame-number dimension

- The trajectory is drawn onto the output frame

- The movement sequence is written to the output video file along with the continuously drawn trajectory

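The clustering and sorting steps of the postprocessing phase can be illustrated with a small pure-Python k-means. This is only a sketch under stated assumptions: the source does not say how the number of centroids is chosen, so the `frames_per_centroid` rule below is hypothetical, as are the function names and the deterministic initialization.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain Lloyd's k-means on (x, y, frame) tuples; returns k centroids."""
    ordered = sorted(points, key=lambda p: p[2])
    # Deterministic init (an assumption): k points evenly spaced along the time axis.
    step = len(ordered) / k
    centroids = [ordered[int(i * step)] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster; keep it if the cluster is empty.
        updated = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return centroids


def trajectory(points, frames_per_centroid=10):
    """Cluster tracked points and return the centroids ordered by frame number."""
    if not points:
        return []
    frames = [p[2] for p in points]
    span = max(frames) - min(frames) + 1
    # Hypothetical rule: roughly one centroid per `frames_per_centroid` frames.
    k = max(1, min(len(points), span // frames_per_centroid))
    return sorted(kmeans(points, k), key=lambda c: c[2])
```

Connecting consecutive centroids of the returned list with line segments gives the trajectory for one movement interval.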

The following image shows the sum of all trajectories found in a 2-hour-long input video. This can be used to classify trajectories as (not) suspicious.