Peter Demcak

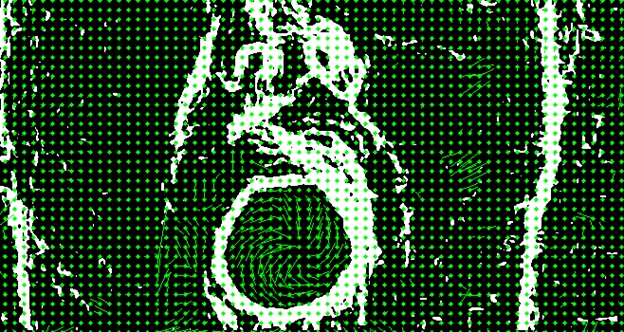

In this project, we aim to recognize gestures that users make with their lips, for example: closed mouth, open mouth, wide-open mouth, puckered lips. The challenges in this task are the high homogeneity of the observed area and the rapidity of lip movements. Our first attempts at detecting these gestures are based on detecting lip movement through optical flow, computed with the Farneback method implemented in OpenCV, or alternatively on calculating the motion gradient from a silhouette image. It appears that these methods might not be optimal for solving this problem.

OpenCV functions: cvtColor, Sobel, cartToPolar, threshold, accumulateWeighted, calcMotionGradient, calcOpticalFlowFarneback

Process

- Detect the position of the largest face in the image using an OpenCV cascade classifier. All further steps are applied to the lower half of the detected face.

faceRects = detect(frame, faceClass);
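The `detect` helper is not shown in the source. As a minimal sketch of the selection step only, assuming the cascade classifier has already returned a list of face rectangles, the largest face and its lower half could be picked as follows. `Box` is a hypothetical stand-in for `cv::Rect`, and `largestFace` and `lowerHalf` are illustrative names, not part of OpenCV or of the original project.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Rect: top-left corner plus size, in pixels.
struct Box {
    int x, y, width, height;
};

// Returns the index of the rectangle with the largest area,
// or -1 if no face was detected.
int largestFace(const std::vector<Box>& faces) {
    int best = -1;
    long bestArea = -1;
    for (std::size_t i = 0; i < faces.size(); ++i) {
        long area = static_cast<long>(faces[i].width) * faces[i].height;
        if (area > bestArea) {
            bestArea = area;
            best = static_cast<int>(i);
        }
    }
    return best;
}

// Returns the lower half of a face rectangle, where the mouth is expected.
// For odd heights the extra row goes to the lower half.
Box lowerHalf(const Box& face) {
    int half = face.height / 2;
    return Box{face.x, face.y + half, face.width, face.height - half};
}
```

With `cv::Rect` the same region could be used to crop the frame before the steps that follow.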

- Convert the image to the HLS color space and extract its lightness (L) channel.

- Combine the results of horizontal and vertical Sobel methods to detect edges of the face features.

Sobel(hlsChannels[1], sobelVertical, CV_32F, 0, 1, 9);
Sobel(hlsChannels[1], sobelHorizontal, CV_32F, 1, 0, 9);
cartToPolar(sobelHorizontal, sobelVertical, sobelMagnitude, sobelAngle, false);
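To make the arithmetic behind these two steps concrete, here is a minimal sketch in plain C++, without OpenCV. It assumes a 3x3 Sobel kernel for brevity (the call above uses a kernel size of 9), and the names `lightness`, `Gray`, `Gradient`, and `sobelAt` are illustrative only. `cartToPolar` combines the two derivative images per pixel as magnitude `sqrt(gx² + gy²)` and angle `atan2(gy, gx)`.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Lightness in the HLS model: the mean of the largest and smallest
// of the three color components (all on the same scale, e.g. 0..255).
float lightness(float r, float g, float b) {
    float hi = std::max({r, g, b});
    float lo = std::min({r, g, b});
    return (hi + lo) / 2.0f;
}

// Hypothetical minimal container for a row-major single-channel image.
struct Gray {
    int rows, cols;
    std::vector<float> data;
    float at(int r, int c) const { return data[r * cols + c]; }
};

struct Gradient {
    float magnitude;
    float angle;  // radians, in [0, 2*pi)
};

// 3x3 Sobel response at an interior pixel (r, c), combined into
// magnitude and direction in the way cartToPolar does per pixel.
Gradient sobelAt(const Gray& img, int r, int c) {
    // Derivative along x (columns): right column minus left column.
    float gx = (img.at(r - 1, c + 1) + 2 * img.at(r, c + 1) + img.at(r + 1, c + 1))
             - (img.at(r - 1, c - 1) + 2 * img.at(r, c - 1) + img.at(r + 1, c - 1));
    // Derivative along y (rows): bottom row minus top row.
    float gy = (img.at(r + 1, c - 1) + 2 * img.at(r + 1, c) + img.at(r + 1, c + 1))
             - (img.at(r - 1, c - 1) + 2 * img.at(r - 1, c) + img.at(r - 1, c + 1));
    float angle = std::atan2(gy, gx);
    // std::atan2 returns values in (-pi, pi]; shift negatives into [0, 2*pi).
    if (angle < 0.0f) angle += 2.0f * 3.14159265358979f;
    return Gradient{std::sqrt(gx * gx + gy * gy), angle};
}
```

A vertical edge (brightness changing from left to right) gives a gradient pointing along x, while a horizontal edge such as the line between closed lips gives a gradient pointing along y.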

- Accumulate the edge-detection images of successive frames on top of each other to obtain the silhouette image. To avoid amplifying noise in areas without edges, first apply a threshold to the Sobel magnitude map.

threshold(sobelMagnitude, sobelMagnitude, norm(sobelMagnitude, NORM_INF)/6, 255, THRESH_TOZERO);
accumulateWeighted(sobelMagnitude, motionHistoryImage, intensityLoss);
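The per-pixel effect of these two calls can be sketched in plain C++ as below. This is an illustration under stated assumptions, not the project's code: images are flattened into `std::vector<float>`, and `maxAbs`, `thresholdToZero`, and `accumulateFrame` are hypothetical names that mirror `norm(..., NORM_INF)`, `THRESH_TOZERO`, and `accumulateWeighted` respectively.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Largest absolute value in the image, which is what
// norm(img, NORM_INF) yields for a single-channel image.
float maxAbs(const std::vector<float>& img) {
    float m = 0.0f;
    for (float v : img) m = std::max(m, std::fabs(v));
    return m;
}

// Mirrors THRESH_TOZERO: values above the threshold are kept unchanged,
// everything else is set to zero.
void thresholdToZero(std::vector<float>& img, float thresh) {
    for (float& v : img) {
        if (v <= thresh) v = 0.0f;
    }
}

// Mirrors accumulateWeighted: acc = (1 - alpha) * acc + alpha * frame.
// alpha weights the new frame, so older frames fade by (1 - alpha) per step.
void accumulateFrame(const std::vector<float>& frame,
                     std::vector<float>& acc, float alpha) {
    for (std::size_t i = 0; i < acc.size() && i < frame.size(); ++i) {
        acc[i] = (1.0f - alpha) * acc[i] + alpha * frame[i];
    }
}
```

Because the threshold is one sixth of the strongest edge response in the current frame, it adapts to the overall contrast. In the original call the `intensityLoss` argument plays the role of `alpha`.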

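- Calculate the dense optical flow between the previous and the current frame using the Farneback method implemented in OpenCV. In the call below, the numeric arguments are, in order: pyramid scale 0.5, 3 pyramid levels, averaging window size 15, 3 iterations per level, polynomial neighborhood size 5, polynomial sigma 1.2, and no flags.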

calcOpticalFlowFarneback(prevSobel, sobelMagnitudeCopy, flow, 0.5, 3, 15, 3, 5, 1.2, 0);