Introduction

The source code of our method can be found at – https://github.com/IgorJanos/stuFieldReg-official

The official repository of the Calib360 dataset can be found at – https://github.com/IgorJanos/stuCalib360

The best pre-trained models can be downloaded at – https://vggnas.fiit.stuba.sk/download/janos/fieldreg/fieldreg-experiments.tar.gz

You can find our original paper at – … URL …

Dataset

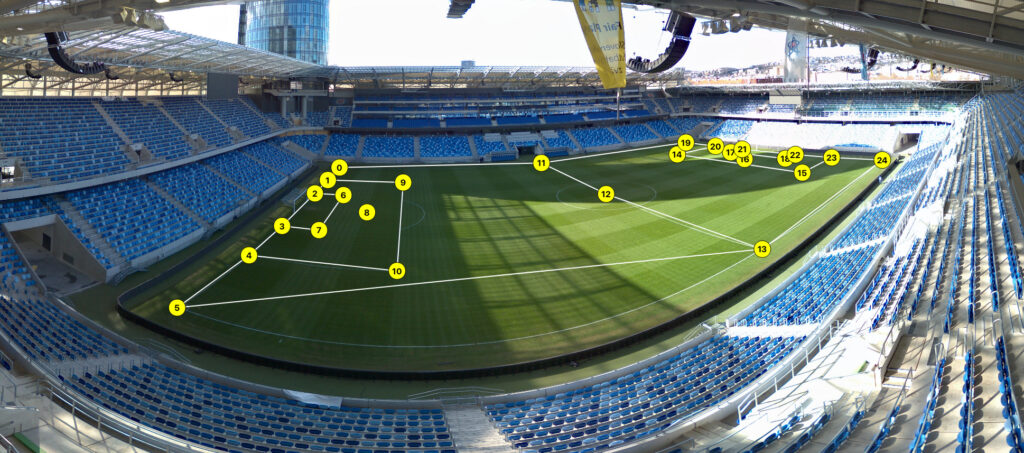

We propose the Calib360 dataset, which contains 300,000 training images with accurate homography annotations, which we have constructed from equirectangular panoramas. In panoramas, we annotate the positions of known keypoints of the football playing field model.

Next, from the annotated keypoints we extract the panoramic camera position and orientation with respect to the playing field. Once the camera parameters are extracted, we can generate unlimited number of different views of the playing field by adjusting the viewing angles and field of view. These generated images are perfect for training of neural networks because they all conform to the pinhole camera model and contain accurate annotations.

Results

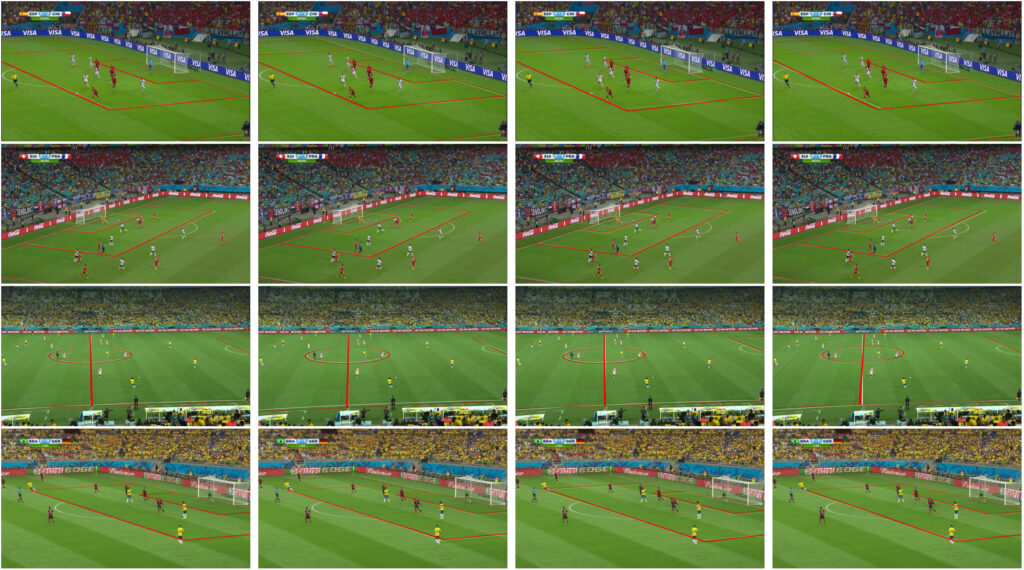

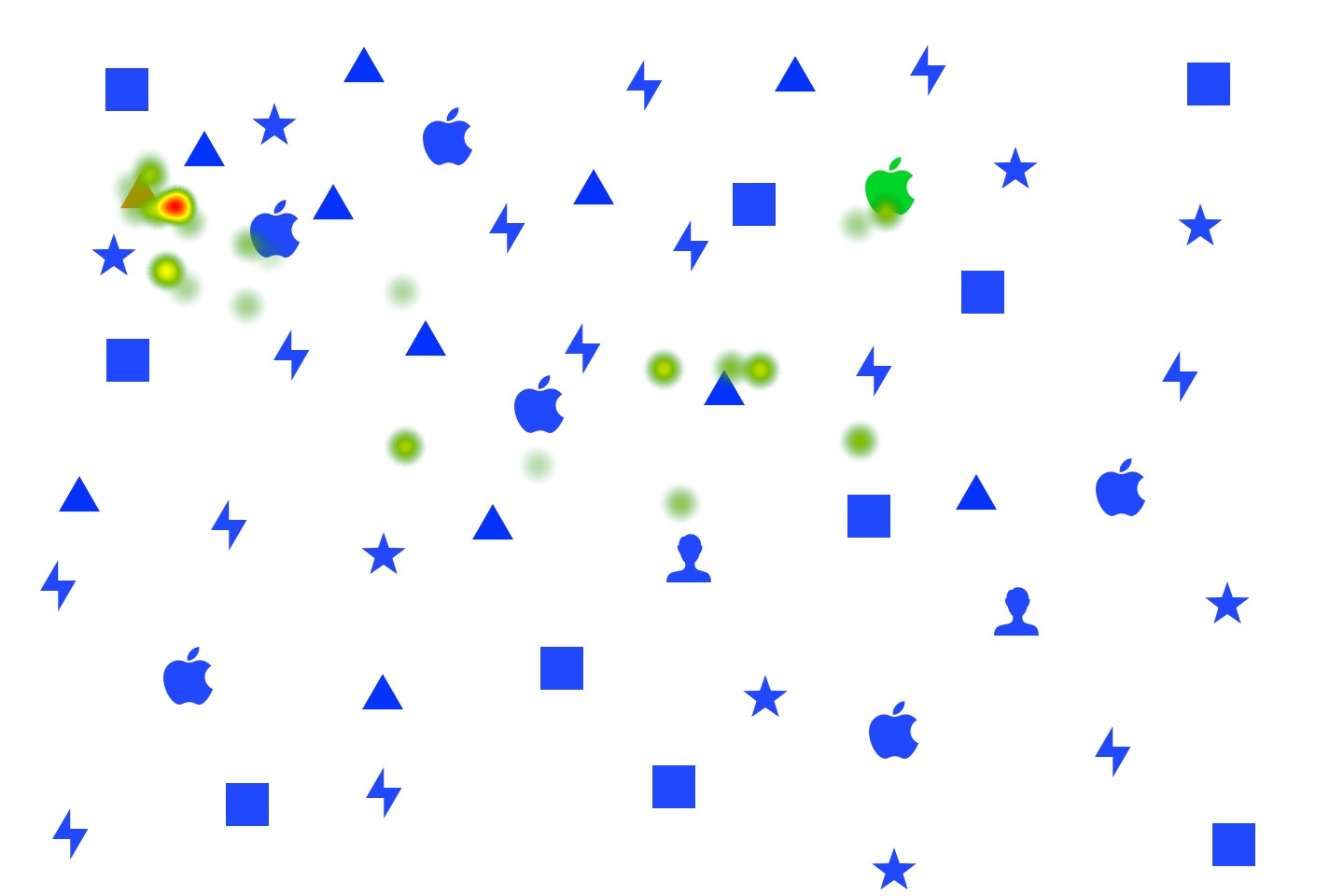

Estimated playing field registration by our method (first column), by the method of Nie et al. (2021, second column), by the method of Chu et al. (2022, third column), and by the method of Theiner et al. (2023, fourth column).