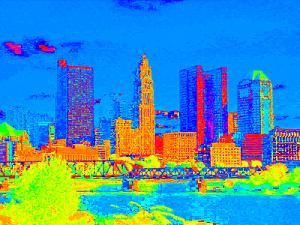

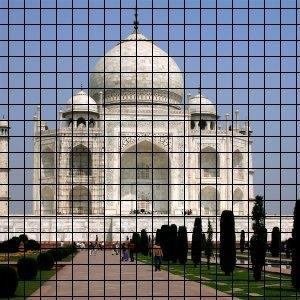

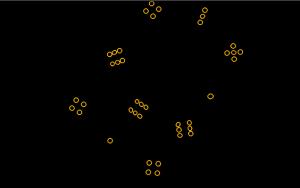

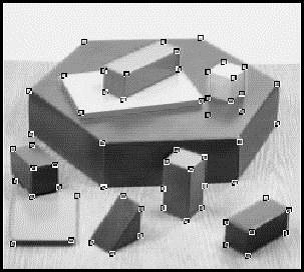

The project focuses on detecting images whose main content is cities and buildings. The detection assumes that such images contain many edges, produced by windows and walls, as well as visible sky. The algorithm builds a feature vector from these cues and classifies it with an SVM.

Functions used: HoughLinesP, countNonZero, Sobel, threshold, merge, cvtColor, split, CvSVM

The process

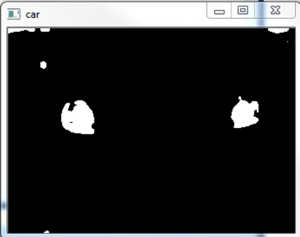

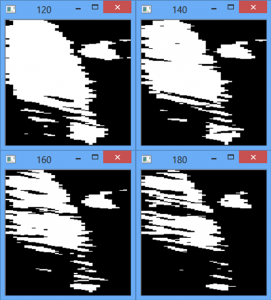

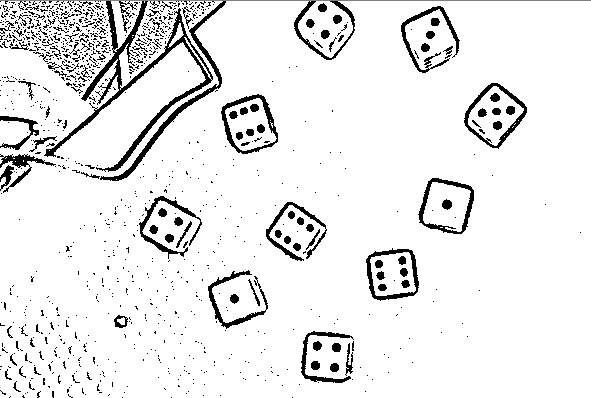

- Create edge image

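    // Horizontal derivative (dx = 1, dy = 0), 3x3 kernel
    cv::Sobel(intput, grad_x, CV_16S, 1, 0, 3, 1, 0, cv::BORDER_DEFAULT);
    // Vertical derivative (dx = 0, dy = 1), 3x3 kernel
    cv::Sobel(intput, grad_y, CV_16S, 0, 1, 3, 1, 0, cv::BORDER_DEFAULT);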

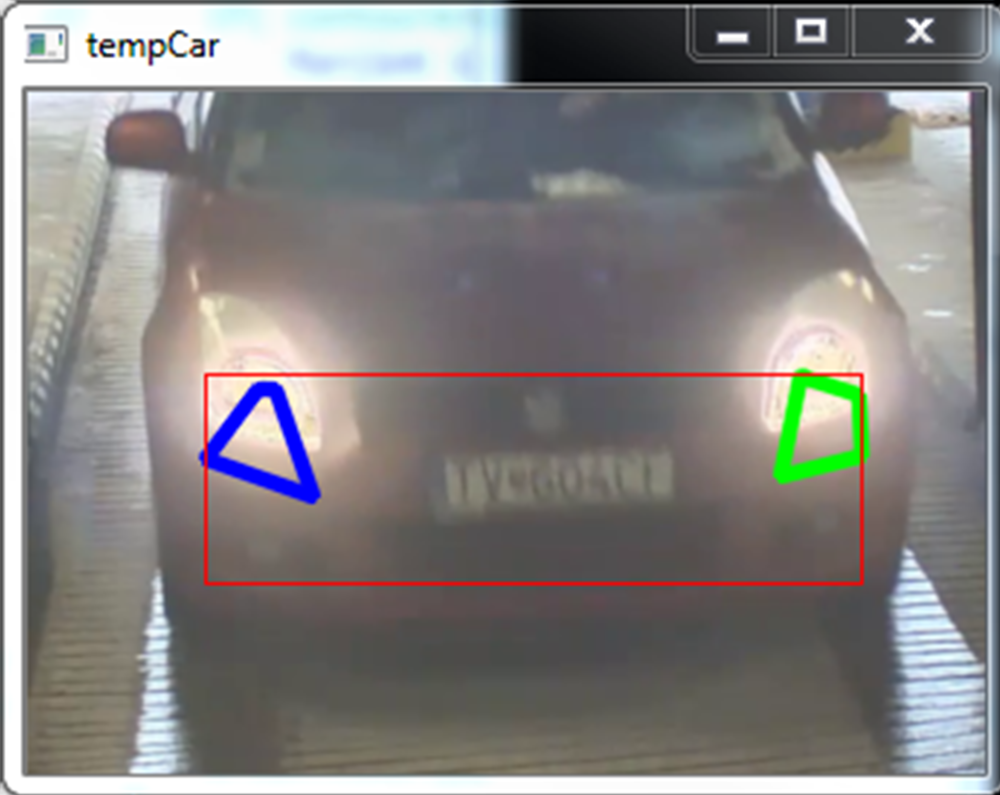
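The two Sobel calls give horizontal and vertical derivatives, which presumably are combined and thresholded to obtain the binary edge image used in the next step. The write-up does not show that part, so the following is only a minimal sketch in plain C++ (no OpenCV). It assumes the common |gx| + |gy| magnitude approximation; the names `Image`, `sobelBinaryEdges`, `countEdgePixels` and the parameter `thresh` are hypothetical.

```cpp
#include <cstdlib>
#include <vector>

// Grayscale image as rows of intensity values (illustrative type, not from the project).
using Image = std::vector<std::vector<int>>;

// Applies 3x3 Sobel kernels and returns a binary edge map (1 = edge, 0 = no edge).
// Assumptions: magnitude is approximated by |gx| + |gy|, border pixels are left at 0,
// and `thresh` is a hypothetical tuning parameter.
Image sobelBinaryEdges(const Image& img, int thresh) {
    const int rows = static_cast<int>(img.size());
    const int cols = rows > 0 ? static_cast<int>(img[0].size()) : 0;
    Image edges(rows, std::vector<int>(cols, 0));
    for (int y = 1; y + 1 < rows; ++y) {
        for (int x = 1; x + 1 < cols; ++x) {
            // Horizontal derivative (responds to vertical edges).
            int gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1])
                   - (img[y - 1][x - 1] + 2 * img[y][x - 1] + img[y + 1][x - 1]);
            // Vertical derivative (responds to horizontal edges).
            int gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1])
                   - (img[y - 1][x - 1] + 2 * img[y - 1][x] + img[y - 1][x + 1]);
            if (std::abs(gx) + std::abs(gy) > thresh) edges[y][x] = 1;
        }
    }
    return edges;
}

// Counts edge pixels, analogous to what cv::countNonZero reports for a binary image.
int countEdgePixels(const Image& edges) {
    int n = 0;
    for (const auto& row : edges)
        for (int v : row) n += (v != 0);
    return n;
}
```

The edge-pixel count could serve as one entry of the feature vector, although the original does not state exactly which values it uses.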

- Find lines in the binary edge image

    cv::HoughLinesP(edgeImage, edgeLines, 1, CV_PI / 180.0, 1, 10, 0);

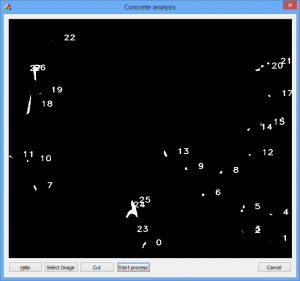

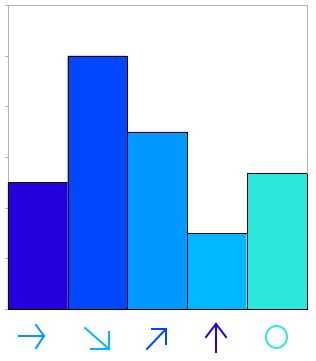

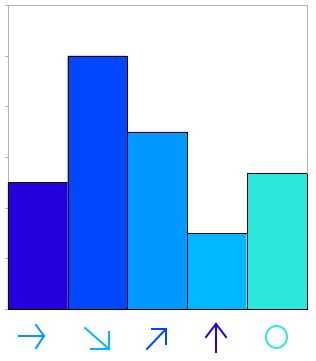

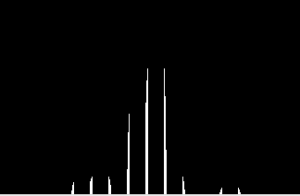

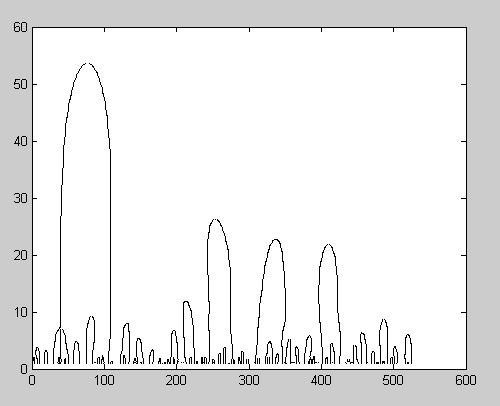

- Count the number of lines that fall within each specified tilt (angle) range

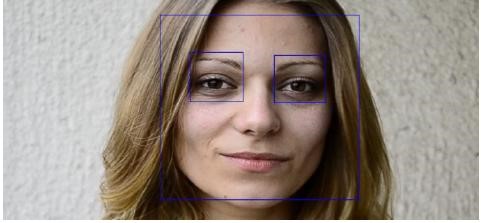
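Counting lines by tilt can be sketched as follows. Each segment from `cv::HoughLinesP` is given as (x1, y1, x2, y2), so its tilt follows from `atan2`. This is a minimal illustration in plain C++, not the project's code: the `Segment` struct, the function names, and the target angles and tolerance are all assumptions.

```cpp
#include <cmath>
#include <vector>

// One line segment with the same field order as cv::HoughLinesP output (x1, y1, x2, y2).
struct Segment {
    int x1, y1, x2, y2;
};

// Tilt of a segment in degrees, folded into [0, 180) so that direction does not matter.
// 0 means horizontal, 90 means vertical.
double tiltDegrees(const Segment& s) {
    const double pi = std::acos(-1.0);
    double angle = std::atan2(static_cast<double>(s.y2 - s.y1),
                              static_cast<double>(s.x2 - s.x1)) * 180.0 / pi;
    if (angle < 0.0) angle += 180.0;
    if (angle >= 180.0) angle -= 180.0;
    return angle;
}

// Counts segments whose tilt lies within `tolerance` degrees of `target`.
// The wrap-around at 0/180 is handled so that 179 degrees counts as near-horizontal.
int countLinesWithTilt(const std::vector<Segment>& lines, double target, double tolerance) {
    int count = 0;
    for (const Segment& s : lines) {
        double diff = std::fabs(tiltDegrees(s) - target);
        diff = std::fmin(diff, 180.0 - diff);
        if (diff <= tolerance) ++count;
    }
    return count;
}
```

For building images one would expect high counts near 0 and 90 degrees, for example `countLinesWithTilt(lines, 90.0, 5.0)` for near-vertical walls and window frames.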

- Convert the original image to the HSV color space and discard the saturation and value channels, keeping only hue

    cv::cvtColor(src, hsv, CV_BGR2HSV);

- Scan the image from top to bottom; if a pixel is not blue, none of the pixels below it in the same column are treated as sky

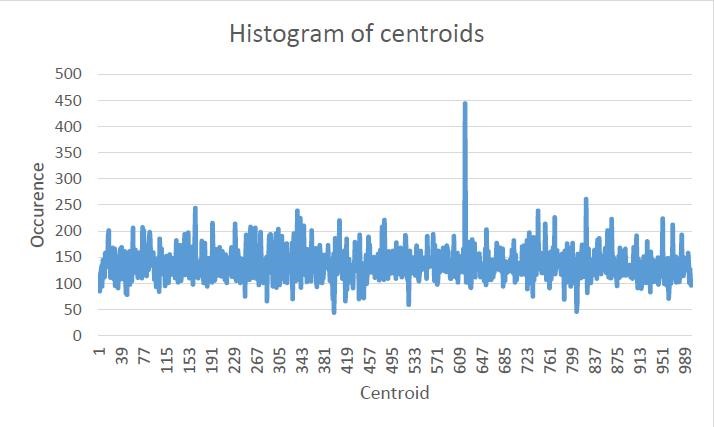

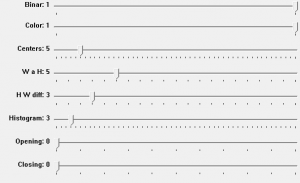
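The top-to-bottom sky scan can be illustrated with a short plain C++ sketch that works on the hue channel only. It assumes hue values on OpenCV's 8-bit scale (0..179, where pure blue is 120); the bounds in `isBlue`, the type `HueImage`, and the functions `skyMask` and `skyRatio` are hypothetical, since the original does not give the actual thresholds or the derived feature.

```cpp
#include <cstddef>
#include <vector>

// Hue channel as rows of values on OpenCV's 8-bit scale (0..179), where pure blue is 120.
using HueImage = std::vector<std::vector<int>>;

// Hypothetical hue range treated as "blue"; the project's actual bounds are not given.
bool isBlue(int hue, int lo = 100, int hi = 130) {
    return hue >= lo && hue <= hi;
}

// Scans every column from top to bottom and marks pixels as sky (1) until the first
// non-blue pixel is met; everything below that pixel stays non-sky (0).
std::vector<std::vector<int>> skyMask(const HueImage& hue) {
    const std::size_t rows = hue.size();
    const std::size_t cols = rows > 0 ? hue[0].size() : 0;
    std::vector<std::vector<int>> mask(rows, std::vector<int>(cols, 0));
    for (std::size_t x = 0; x < cols; ++x) {
        for (std::size_t y = 0; y < rows; ++y) {
            if (!isBlue(hue[y][x])) break;  // sky ends here for this column
            mask[y][x] = 1;
        }
    }
    return mask;
}

// Fraction of pixels marked as sky, usable as one entry of a feature vector.
double skyRatio(const std::vector<std::vector<int>>& mask) {
    std::size_t total = 0, sky = 0;
    for (const auto& row : mask)
        for (int v : row) { ++total; sky += (v != 0); }
    return total == 0 ? 0.0 : static_cast<double>(sky) / static_cast<double>(total);
}
```

Stopping at the first non-blue pixel keeps blue objects lower in the picture, such as water or blue facades, from being counted as sky.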

- Classification with SVM

    // C-support vector classification with a linear kernel
    CvSVMParams params;
    params.svm_type = CvSVM::C_SVC;
    params.kernel_type = CvSVM::LINEAR;
    params.term_crit = cvTermCriteria(CV_TERMCRIT_ITER, 5000, 1e-5);

    // Wrap the single feature vector in a matrix and let the trained SVM classify it
    float OpencvSVM::predicate(std::vector<float> features) {
        std::vector<std::vector<float> > featuresMatrix;
        featuresMatrix.push_back(features);
        cv::Mat featuresMat = createMat(featuresMatrix);
        return SVM.predict(featuresMat);
    }
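With a linear kernel, a trained two-class SVM reduces to checking the sign of a weighted sum of the features plus a bias. The sketch below shows that decision rule in plain C++ for illustration only. `LinearModel`, `decisionValue` and `classify` are hypothetical names, the weights would come from training rather than being set by hand, and the +1/-1 labels are an assumption.

```cpp
#include <numeric>
#include <stdexcept>
#include <vector>

// Illustrative linear classifier; `weights` and `bias` are hypothetical values that
// would normally result from training, they are not taken from the project.
struct LinearModel {
    std::vector<float> weights;
    float bias;
};

// Signed score w . x + b of a feature vector.
float decisionValue(const LinearModel& m, const std::vector<float>& features) {
    if (features.size() != m.weights.size())
        throw std::invalid_argument("feature vector has wrong length");
    return std::inner_product(features.begin(), features.end(), m.weights.begin(), m.bias);
}

// Returns +1 (e.g. "building") for a non-negative score and -1 otherwise.
int classify(const LinearModel& m, const std::vector<float>& features) {
    return decisionValue(m, features) >= 0.0f ? 1 : -1;
}
```

A feature vector here might hold values such as line counts per tilt and a sky ratio, though the exact composition used by the project is not stated.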

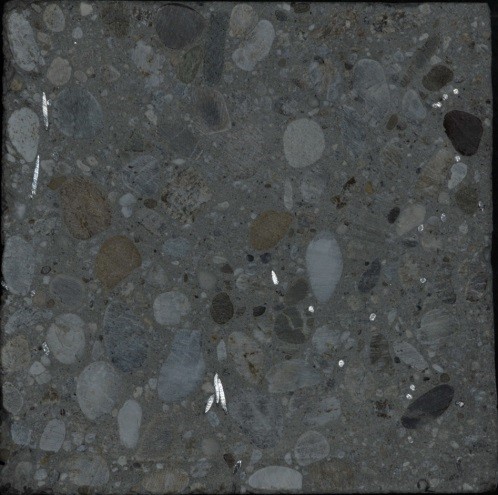

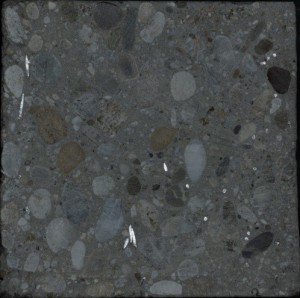

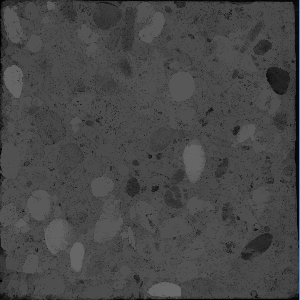

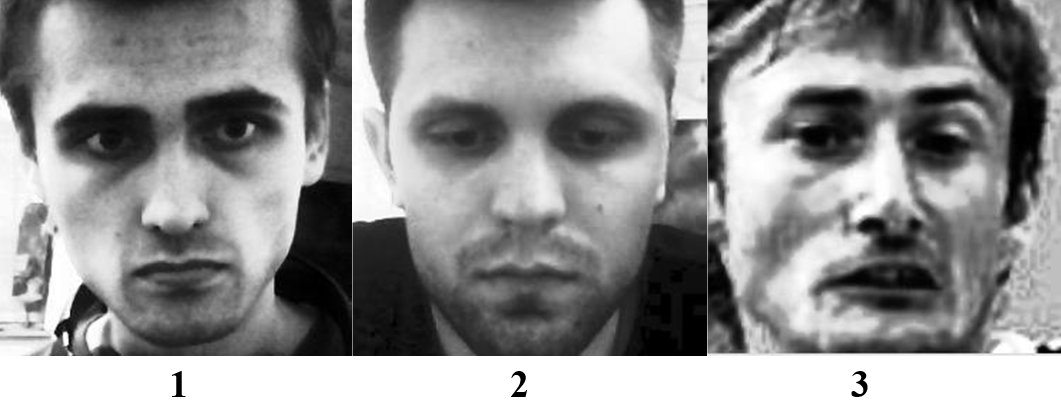

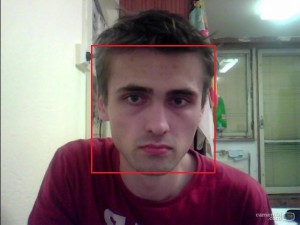

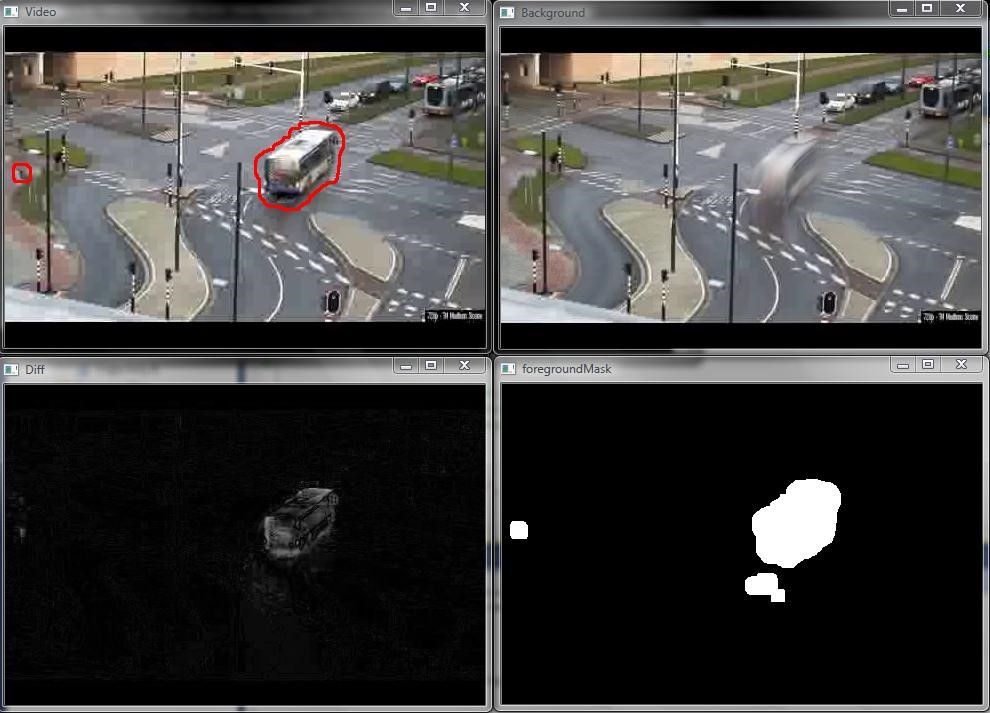

Example